Modular nabs $100M for its AI programming language and inference engine - SiliconANGLE

AUGUST 24, 2023

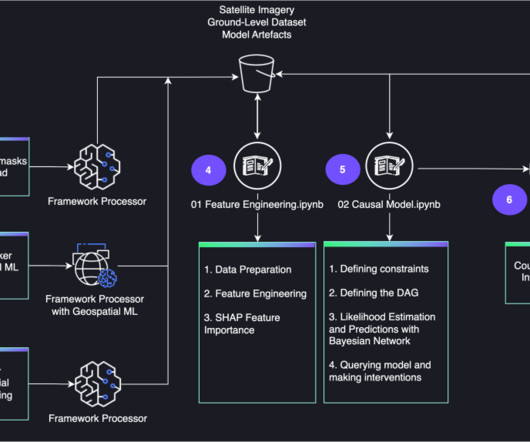

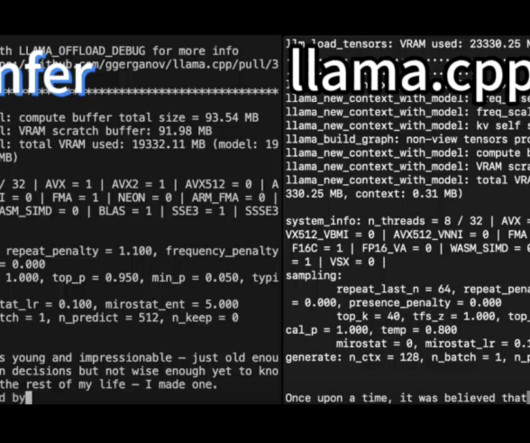

Modular Inc., the creator of a programming language optimized for developing artificial intelligence software, has raised $100 million in fresh funding. General Catalyst led the investment, which w
