Spark NLP 5.0: It’s All About That Search!

John Snow Labs

JULY 5, 2023

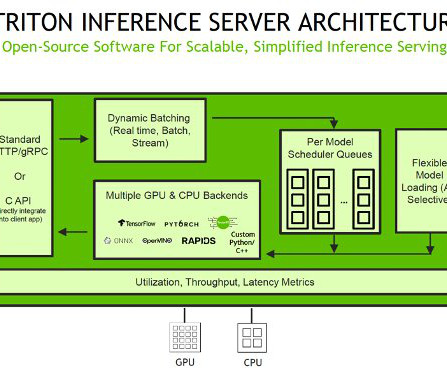

Serving as a high-performance inference engine, ONNX Runtime runs machine learning models stored in the ONNX format and has been shown to significantly boost inference performance across a wide range of models. Our integration of ONNX Runtime has already led to substantial improvements when serving our language models, including BERT.
