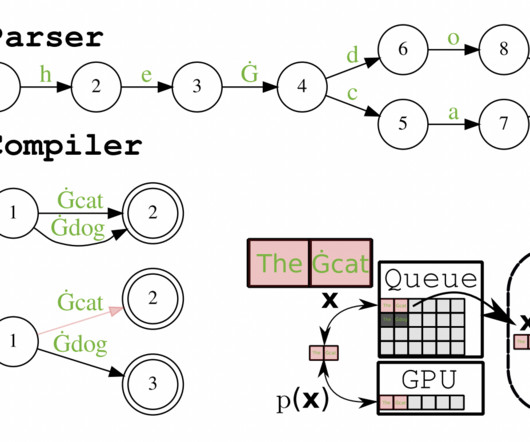

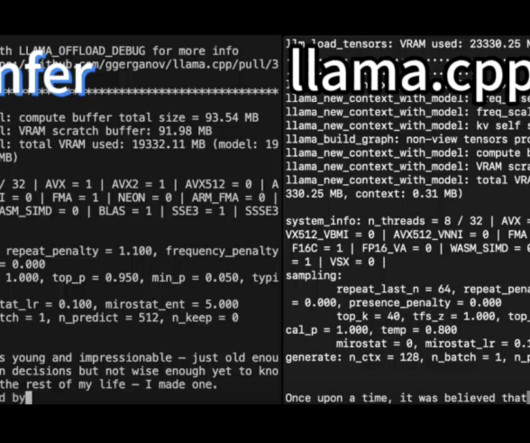

CMU Researchers Introduce ReLM: An AI System For Validating And Querying LLMs Using Standard Regular Expressions

Marktechpost

JUNE 8, 2023

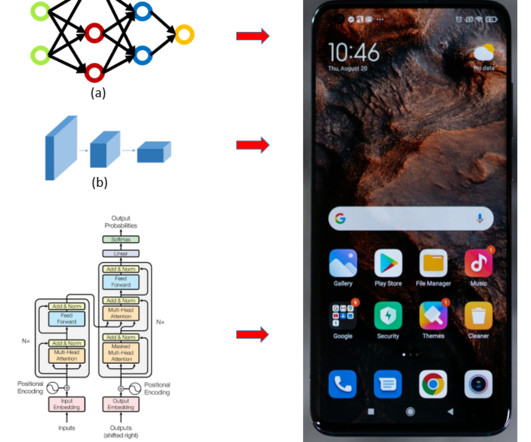

Large language models (LLMs) are widely praised for their ability to generate natural-sounding text, but concerns are growing about their potential negative impacts, such as data memorization, bias, and inappropriate language.
