A Comprehensive Overview of Data Engineering Pipeline Tools

Marktechpost

JUNE 13, 2024

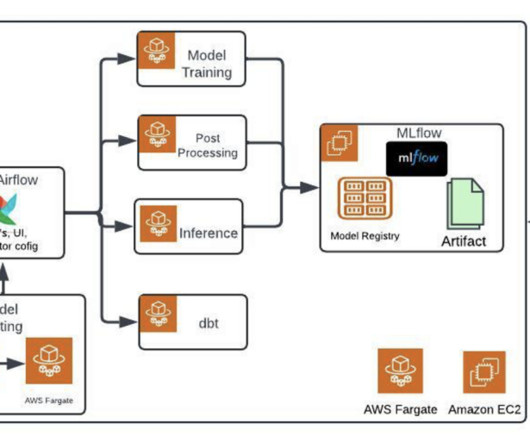

Detailed Examination of Tools

Apache Spark: An open-source platform supporting multiple languages (Python, Java, SQL, Scala, and R). It is well suited to distributed, scalable processing of large datasets and provides fast querying and analysis of big data.
Weaknesses: A steep learning curve, especially during initial setup.
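The overview above does not include Spark code. To illustrate the partition-and-combine model that Spark distributes across a cluster, here is a minimal single-machine sketch in plain Python. It does not use Spark's API: the function names (`split_into_partitions`, `count_words`, `merge_counts`, `distributed_word_count`) are hypothetical, and threads merely stand in for cluster executors. In PySpark, a comparable word count would typically be expressed with RDD operations such as `flatMap` and `reduceByKey`.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce


def split_into_partitions(records, num_partitions):
    """Round-robin the input into roughly equal partitions.

    Hypothetical stand-in for the way Spark splits a dataset into partitions.
    """
    return [records[i::num_partitions] for i in range(num_partitions)]


def count_words(partition):
    """'Map' step: each worker counts the words in its own partition."""
    counts = Counter()
    for line in partition:
        counts.update(line.lower().split())
    return counts


def merge_counts(left, right):
    """'Reduce' step: combine two partial results into one."""
    return left + right


def distributed_word_count(lines, num_partitions=4):
    partitions = split_into_partitions(lines, num_partitions)
    # Threads stand in for executors. This only illustrates the data flow;
    # it offers none of Spark's scale-out or fault tolerance.
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        partial_counts = list(pool.map(count_words, partitions))
    return reduce(merge_counts, partial_counts, Counter())


if __name__ == "__main__":
    lines = [
        "Spark processes data in parallel",
        "data is split into partitions",
        "partitions are processed in parallel",
    ]
    print(distributed_word_count(lines, num_partitions=2).most_common(3))
```

The key design point is that each partition is processed independently and only the small partial results are merged, which is what lets a framework like Spark spread the work across many machines.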

Let's personalize your content