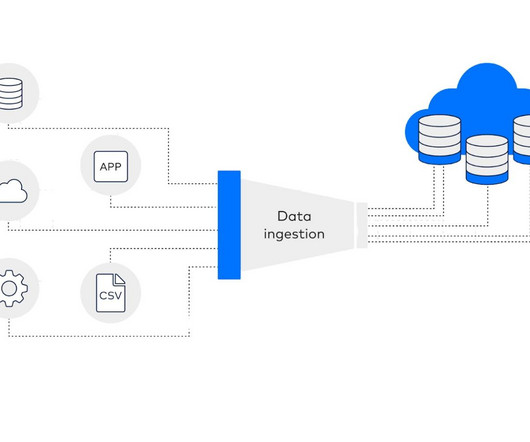

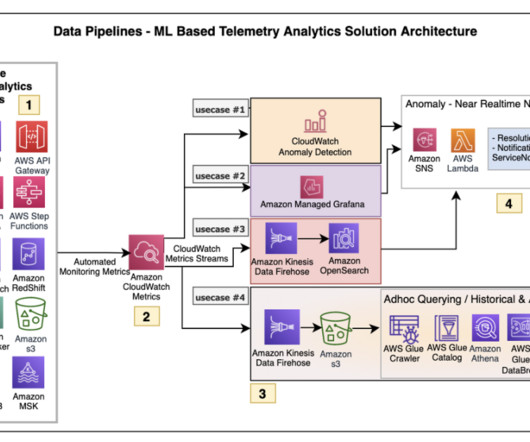

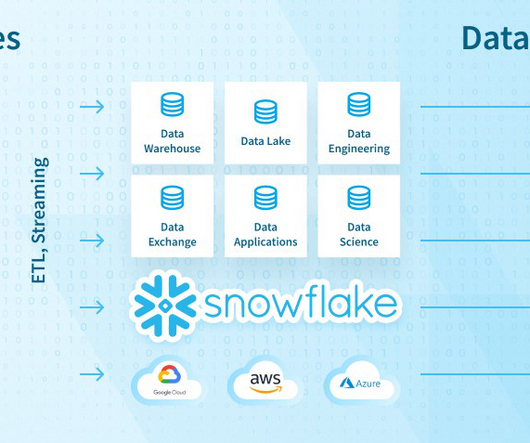

Data Ingestion Featuring AWS

Analytics Vidhya

JUNE 24, 2022

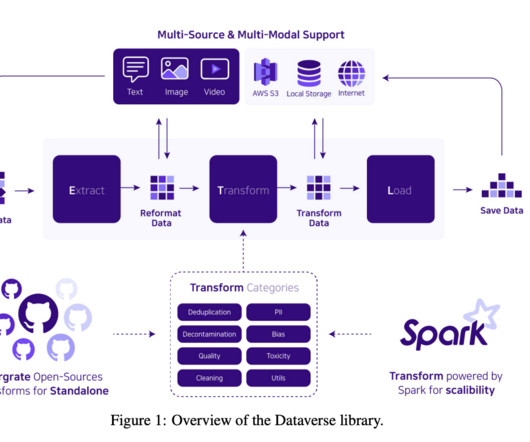

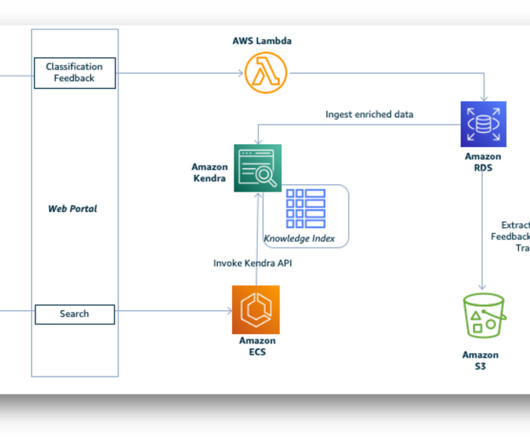

Introduction

Big Data is everywhere, and it remains a hot topic. Data ingestion is the process that helps a team or organization make sense of the ever-increasing volume and complexity of its data and derive useful insights from it. This […].

Let's personalize your content