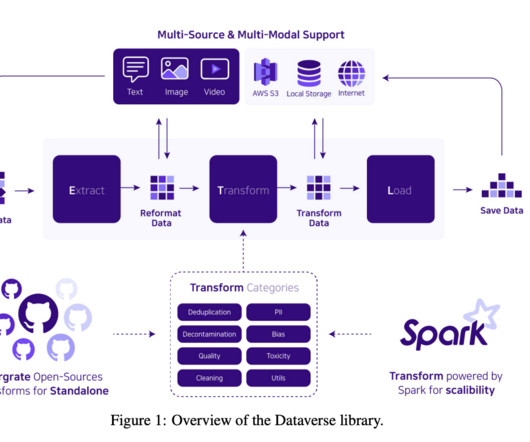

Upstage AI Introduces Dataverse for Addressing Challenges in Data Processing for Large Language Models

Marktechpost

APRIL 1, 2024

Existing research emphasizes the importance of distributed processing and data quality control for improving LLMs. Tools such as Slurm, a cluster workload manager, and Spark, a distributed data processing engine, make it practical to handle very large datasets, while data quality steps such as deduplication, decontamination, and sentence length adjustments refine the training data.
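To make the three quality steps concrete, here is a minimal single-machine sketch in plain Python. It is not Dataverse's API: the function names, the exact-match strategy, and the word-count thresholds are all assumptions chosen for illustration, and "sentence length adjustment" is interpreted here simply as dropping texts that are too short or too long.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivially different copies match."""
    return re.sub(r"\s+", " ", text.strip().lower())


def deduplicate(docs):
    """Keep the first occurrence of each document, compared after normalization."""
    seen = set()
    unique = []
    for doc in docs:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(doc)
    return unique


def decontaminate(docs, benchmark_texts):
    """Drop documents that contain any benchmark text verbatim (after normalization)."""
    # Skip empty benchmark strings, which would otherwise match every document.
    needles = [n for n in (normalize(b) for b in benchmark_texts) if n]
    return [d for d in docs if not any(n in normalize(d) for n in needles)]


def filter_by_length(docs, min_words=3, max_words=1000):
    """Keep documents whose whitespace-separated word count is within bounds (assumed thresholds)."""
    return [d for d in docs if min_words <= len(d.split()) <= max_words]


def clean(docs, benchmark_texts, min_words=3, max_words=1000):
    """Run the three hypothetical steps in order: dedup, decontaminate, length filter."""
    docs = deduplicate(docs)
    docs = decontaminate(docs, benchmark_texts)
    return filter_by_length(docs, min_words, max_words)
```

Calling `clean(corpus, benchmark_questions)` on a list of strings returns the filtered list in its original order. At real scale, exact hashing would typically give way to near-duplicate detection, and each step would run as a distributed job rather than an in-memory loop.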
