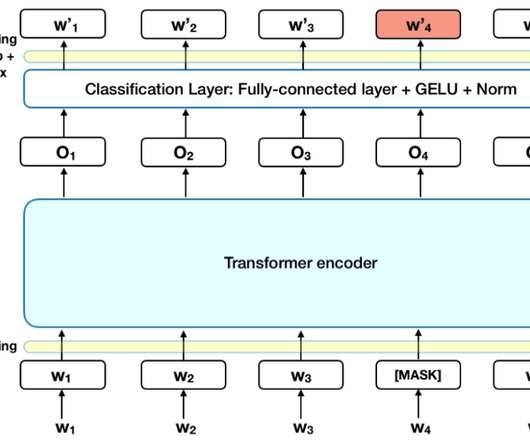

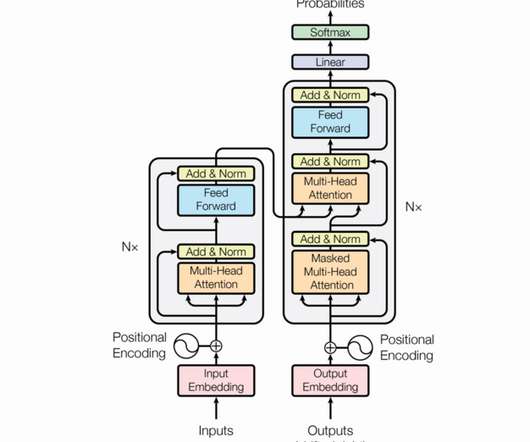

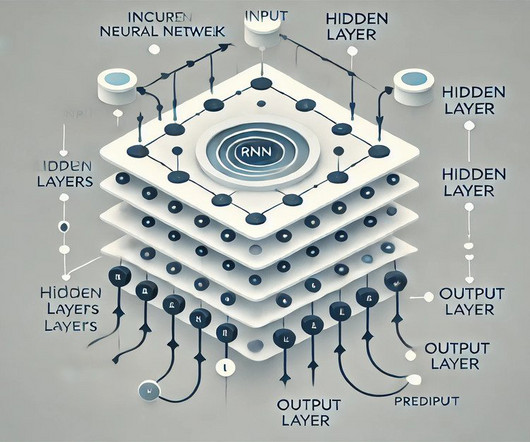

Disaster Tweet Classification using BERT & Neural Network

Analytics Vidhya

DECEMBER 18, 2021

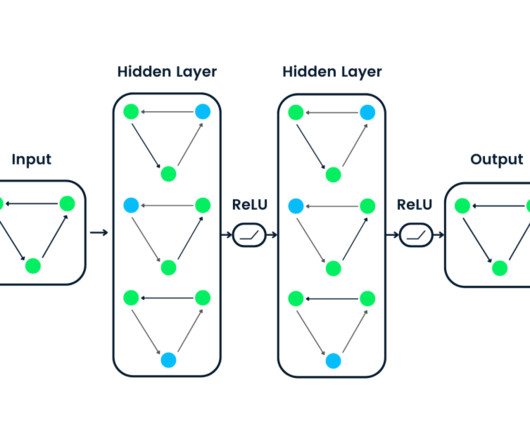

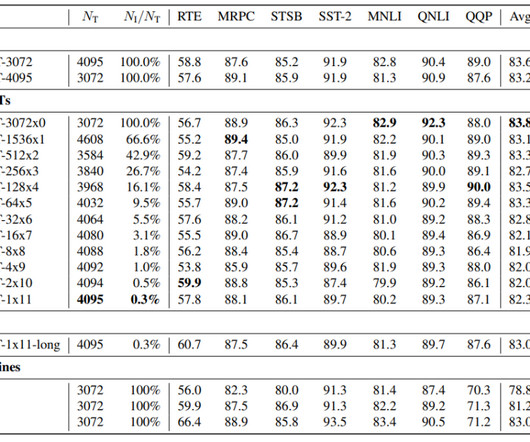

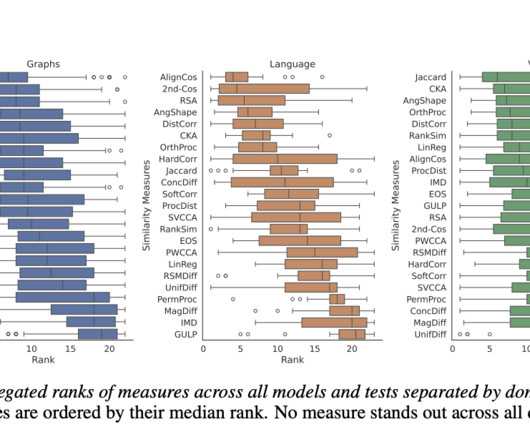

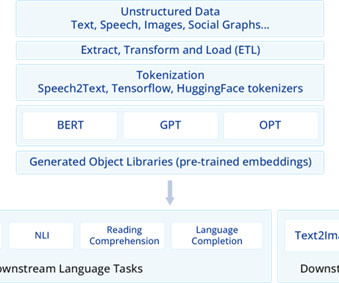

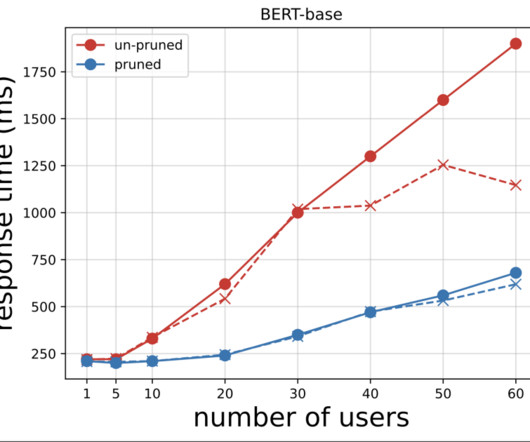

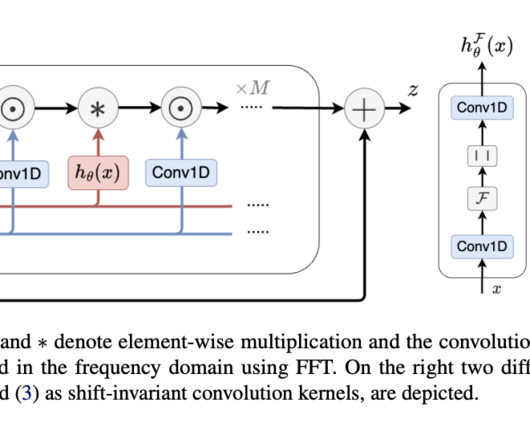

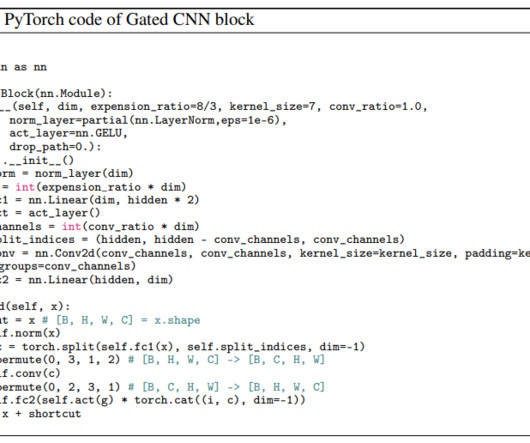

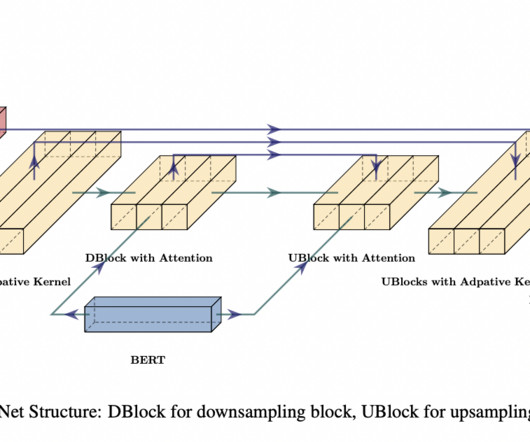

In this article, we are going to use BERT along with a neural […]. The post Disaster Tweet Classification using BERT & Neural Network appeared first on Analytics Vidhya.
