Beyond ChatGPT; AI Agent: A New World of Workers

Unite.AI

AUGUST 28, 2023

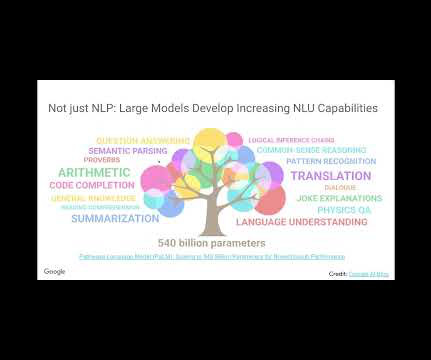

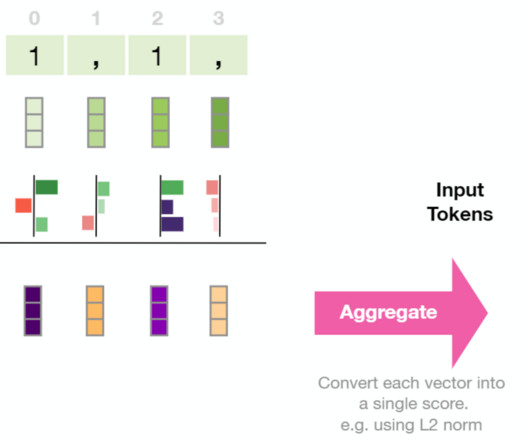

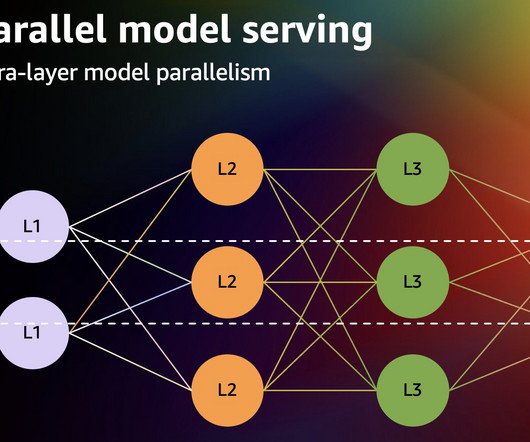

Neural Networks & Deep Learning: Neural networks marked a turning point. Loosely modeled on the way neurons in the human brain connect, they improve by learning from data. Systems such as OpenAI's ChatGPT, BERT, and T5 have enabled breakthroughs in human-AI communication.
