An End-to-End Guide on Google’s BERT

Analytics Vidhya

DECEMBER 13, 2021

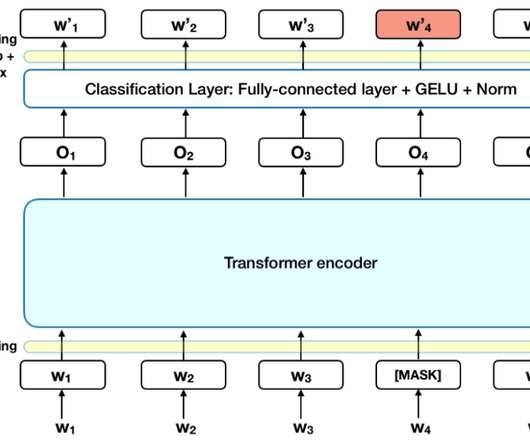

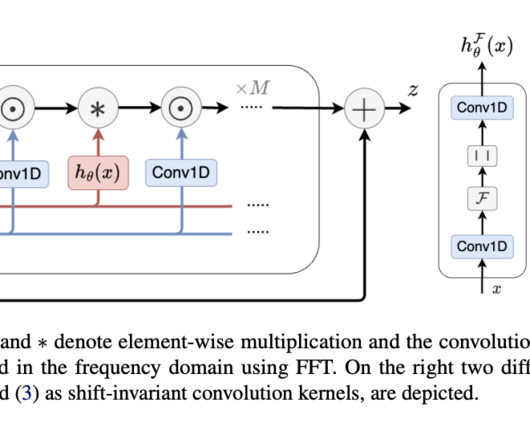

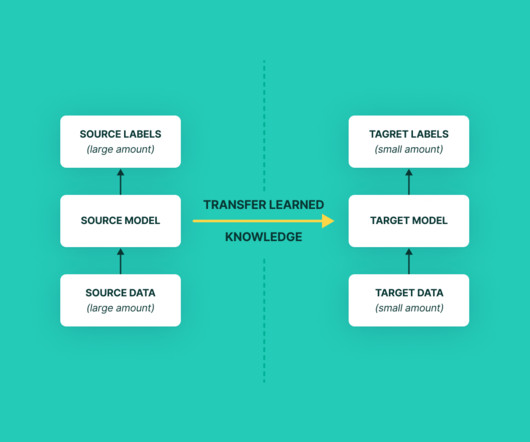

This article was published as a part of the Data Science Blogathon.

Introduction

In the past few years, natural language processing has advanced considerably through the use of deep neural networks, and many state-of-the-art models are now built on them. It […]
