Spark NLP 5.0: It’s All About That Search!

John Snow Labs

JULY 5, 2023

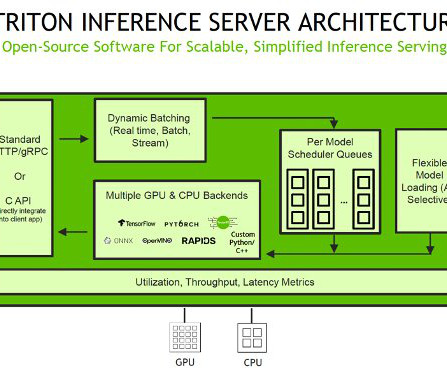

We are delighted to announce the release of Spark NLP 5.0. We are also set to release an array of new LLMs fine-tuned specifically for chat and instruction, now that we have successfully integrated ONNX Runtime into Spark NLP.
