Unveiling the Future of Text Analysis: Trendy Topic Modeling with BERT

Analytics Vidhya

JULY 27, 2023

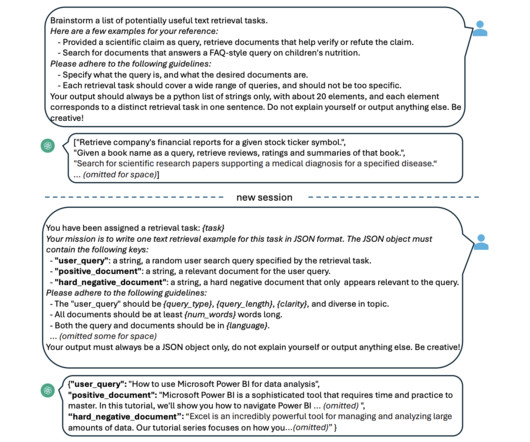

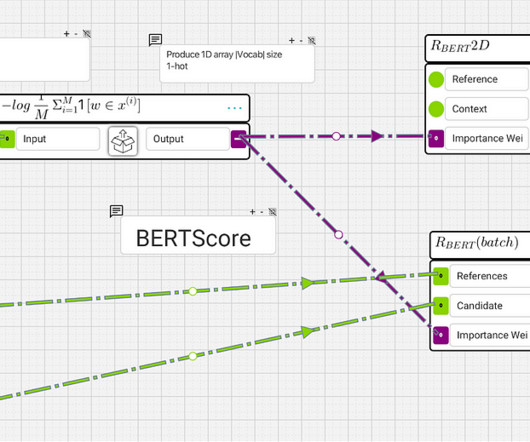

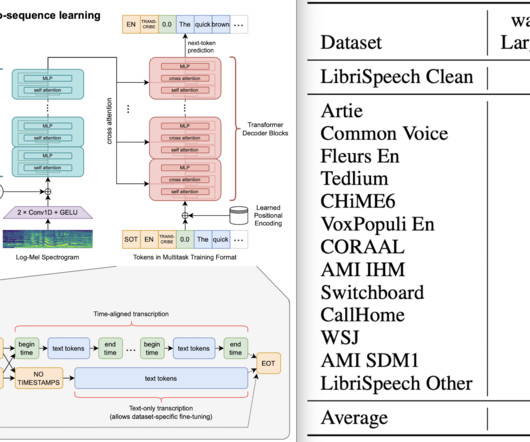

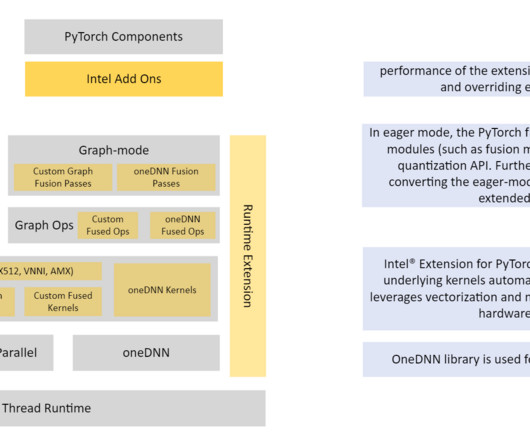

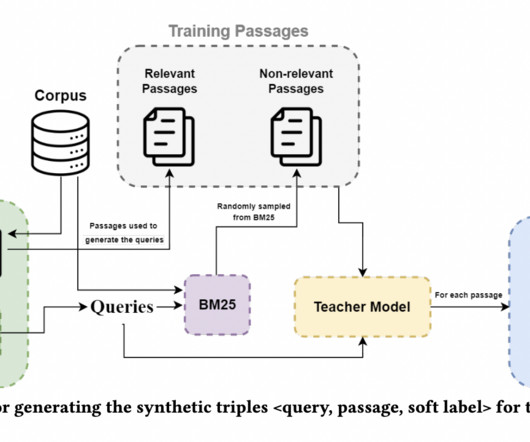

A corpus is a collection of text documents. Topic modeling highlights the underlying structure of a corpus, bringing to light themes and patterns that might […]

The post Unveiling the Future of Text Analysis: Trendy Topic Modeling with BERT appeared first on Analytics Vidhya.
