Streamline diarization using AI as an assistive technology: ZOO Digital’s story

AWS Machine Learning Blog

FEBRUARY 20, 2024

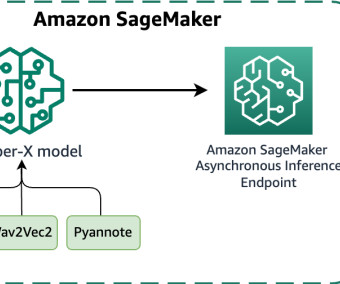

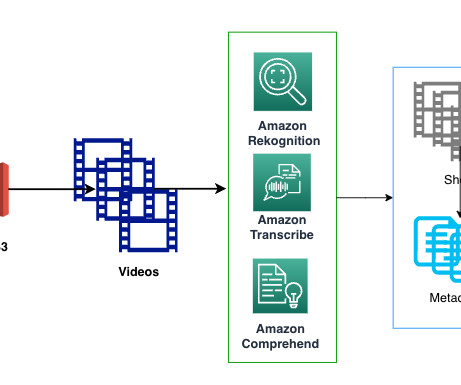

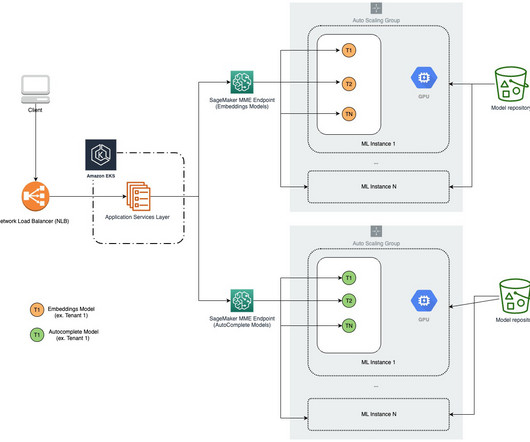

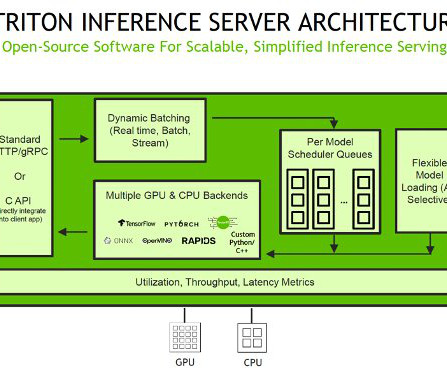

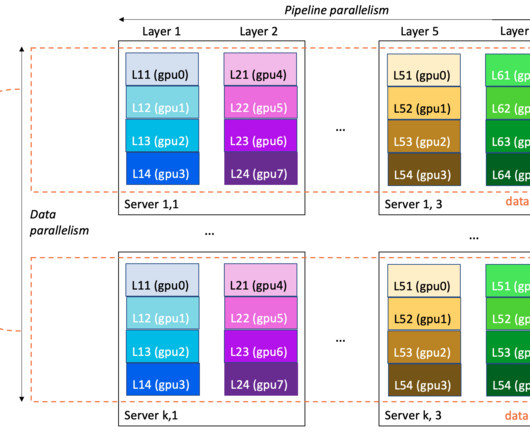

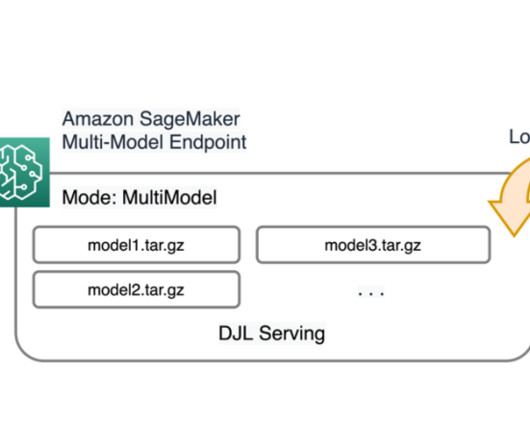

This time-consuming process must be completed before content can be dubbed into another language. SageMaker asynchronous endpoints support upload sizes up to 1 GB and incorporate auto scaling features that efficiently mitigate traffic spikes and save costs during off-peak times. in a code subdirectory. in a code subdirectory.

Let's personalize your content