How Vericast optimized feature engineering using Amazon SageMaker Processing

AWS Machine Learning Blog

MAY 3, 2023

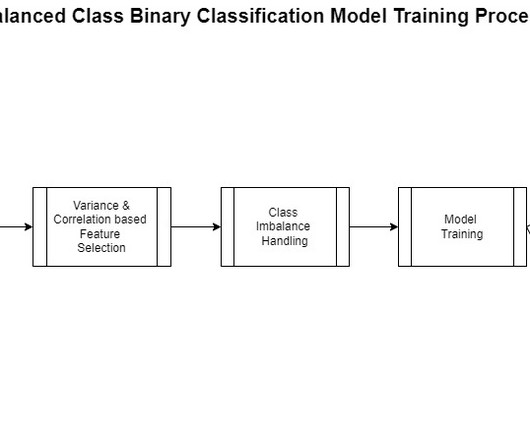

Furthermore, the dynamic nature of a customer’s data can cause large variance in the processing time and resources required to complete the feature engineering optimally. Most of this process is the same for any binary classification task; only the feature engineering step differs. The SageMaker Processing job is now started.
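
To make the resource-variance point concrete, here is a minimal sketch of how job resources might be sized from the input data volume before a Processing job is started. It is not Vericast's actual logic: the function name `choose_processing_resources`, the size thresholds, the 3x scratch-space factor, and the cap of 10 instances are all hypothetical values chosen for illustration. The three fields mirror the `instance_type`, `instance_count`, and `volume_size_in_gb` parameters accepted by the SageMaker Python SDK's `Processor` classes.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ProcessingResources:
    """Resource settings that could be passed to a SageMaker Processing job."""
    instance_type: str
    instance_count: int
    volume_size_gb: int  # EBS volume size per instance


def choose_processing_resources(input_size_gb: float) -> ProcessingResources:
    """Pick resources from the input size (all thresholds are hypothetical)."""
    if input_size_gb < 0:
        raise ValueError("input_size_gb must be non-negative")

    if input_size_gb <= 10:
        # Small datasets: a single general-purpose instance.
        instance_type, count = "ml.m5.xlarge", 1
    elif input_size_gb <= 100:
        # Medium datasets: scale up on a single larger instance.
        instance_type, count = "ml.m5.4xlarge", 1
    else:
        # Large datasets: scale out, roughly one instance per 100 GB, capped at 10.
        instance_type, count = "ml.m5.4xlarge", min(10, math.ceil(input_size_gb / 100))

    # Assume each instance needs about 3x its share of the input as scratch
    # space, with a 30 GB floor.
    per_instance_gb = input_size_gb / count
    volume = max(30, math.ceil(per_instance_gb * 3))
    return ProcessingResources(instance_type, count, volume)


if __name__ == "__main__":
    for size_gb in (2, 50, 450):
        print(size_gb, choose_processing_resources(size_gb))
```

In practice the thresholds would be tuned against observed job durations and memory use rather than fixed up front, since the same pipeline can see very different data volumes from one customer to the next.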
