Use custom metadata created by Amazon Comprehend to intelligently process insurance claims using Amazon Kendra

AWS Machine Learning Blog

DECEMBER 5, 2023

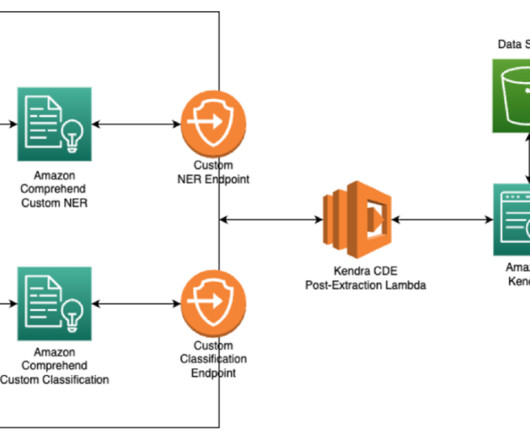

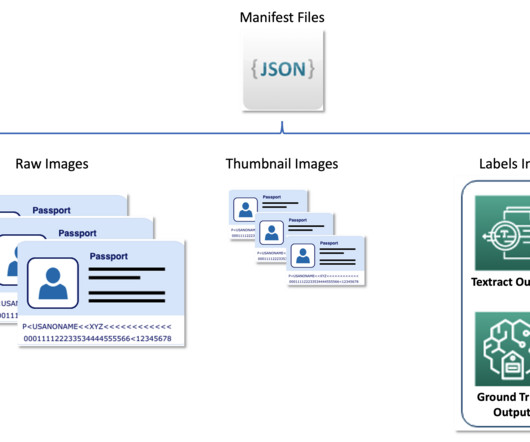

Enterprises may want to add custom metadata like document types (W-2 forms or paystubs), various entity types such as names, organization, and address, in addition to the standard metadata like file type, date created, or size to extend the intelligent search while ingesting the documents.

Let's personalize your content