Boost employee productivity with automated meeting summaries using Amazon Transcribe, Amazon SageMaker, and LLMs from Hugging Face

AWS Machine Learning Blog

MAY 7, 2024

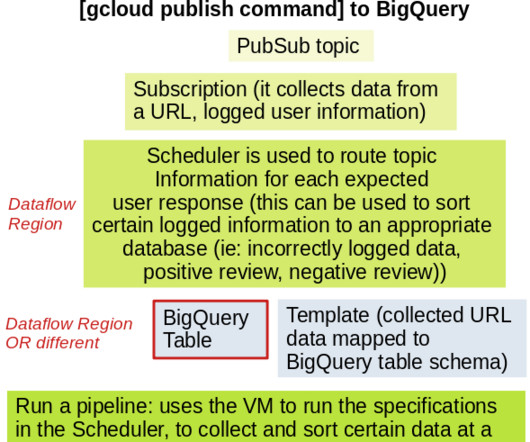

Amazon Transcribe allows for simple audio data ingestion, easy-to-read transcript creation, and accuracy improvement through custom vocabularies. Amazon SageMaker real-time inference endpoints are designed for real-time, interactive, low-latency workloads and provide auto scaling to manage load fluctuations. Recordings must be in .mp4, .mp3, or .wav format.
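As a minimal sketch of the format constraint above, the following Python checks a recording's extension and assembles the parameters for a transcription job. It assumes the transcription service here is Amazon Transcribe and that recordings live in Amazon S3. The helper names, bucket, key, job name, and vocabulary name are hypothetical and used for illustration only. The dictionary keys follow the parameters of the boto3 `start_transcription_job` call.

```python
from pathlib import PurePosixPath
from typing import Optional

# Formats the post says recordings must use.
ALLOWED_FORMATS = {"mp4", "mp3", "wav"}


def media_format(key: str) -> str:
    """Return the lowercase extension of an object key, or raise if unsupported."""
    ext = PurePosixPath(key).suffix.lower().lstrip(".")
    if ext not in ALLOWED_FORMATS:
        raise ValueError(f"Unsupported recording format for {key!r}; expected one of {sorted(ALLOWED_FORMATS)}")
    return ext


def build_transcription_request(
    bucket: str,
    key: str,
    job_name: str,
    vocabulary_name: Optional[str] = None,
    language_code: str = "en-US",  # assumption: English recordings
) -> dict:
    """Build keyword arguments for a transcription job (hypothetical helper)."""
    request = {
        "TranscriptionJobName": job_name,
        "Media": {"MediaFileUri": f"s3://{bucket}/{key}"},
        "MediaFormat": media_format(key),
        "LanguageCode": language_code,
    }
    # A custom vocabulary is optional; include it only when one is named.
    if vocabulary_name:
        request["Settings"] = {"VocabularyName": vocabulary_name}
    return request
```

With AWS credentials configured, the resulting dictionary could be passed along as `boto3.client("transcribe").start_transcription_job(**request)`. Validating the extension up front keeps unsupported files from reaching the service at all.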
