Build a news recommender application with Amazon Personalize

AWS Machine Learning Blog

APRIL 4, 2024

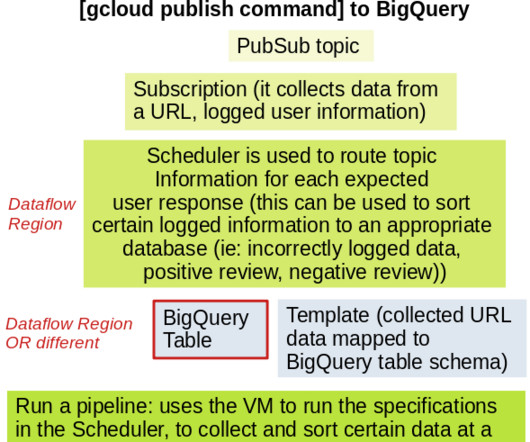

Explainability – Providing transparency into why certain stories are recommended builds user trust.

AWS Glue performs extract, transform, and load (ETL) operations to align the data with the Amazon Personalize dataset schemas.

We discuss how to use the items and interactions data attributes in DynamoDB later in this post.
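To make the ETL alignment step more concrete, the following is a minimal sketch in plain Python rather than an actual AWS Glue job. It maps raw click records to the `USER_ID`, `ITEM_ID`, `TIMESTAMP`, and `EVENT_TYPE` columns of an Amazon Personalize interactions dataset, with `TIMESTAMP` expressed as Unix epoch seconds. The raw field names (`reader_id`, `article_id`, `clicked_at`, `action`), the helper names, and the default event type are assumptions made for illustration and do not come from this post.

```python
import csv
import io
from datetime import datetime, timezone

# Column names for an Amazon Personalize interactions dataset.
PERSONALIZE_COLUMNS = ["USER_ID", "ITEM_ID", "TIMESTAMP", "EVENT_TYPE"]


def to_personalize_row(raw):
    """Map one raw click record to an interactions row.

    The input keys (reader_id, article_id, clicked_at, action) are
    hypothetical; adapt them to your own source data.
    """
    ts = datetime.fromisoformat(raw["clicked_at"])
    if ts.tzinfo is None:
        # Assumption: timestamps without an offset are already in UTC.
        ts = ts.replace(tzinfo=timezone.utc)
    return {
        "USER_ID": str(raw["reader_id"]),
        "ITEM_ID": str(raw["article_id"]),
        "TIMESTAMP": int(ts.timestamp()),  # Unix epoch seconds
        "EVENT_TYPE": raw.get("action", "click"),  # assumed default event
    }


def to_csv(raw_records):
    """Serialize raw records as CSV text with a Personalize-style header."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=PERSONALIZE_COLUMNS, lineterminator="\n"
    )
    writer.writeheader()
    for raw in raw_records:
        writer.writerow(to_personalize_row(raw))
    return buffer.getvalue()


sample = [
    {
        "reader_id": 42,
        "article_id": "news-001",
        "clicked_at": "2024-04-04T12:00:00+00:00",
        "action": "read",
    }
]
csv_text = to_csv(sample)
print(csv_text)
```

In a real pipeline, the same column mapping would typically run inside a Glue job over the full dataset, and the resulting CSV would be written to Amazon S3 for import into Amazon Personalize.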

Let's personalize your content