Amazon EC2 DL2q instance for cost-efficient, high-performance AI inference is now generally available

AWS Machine Learning Blog

NOVEMBER 22, 2023

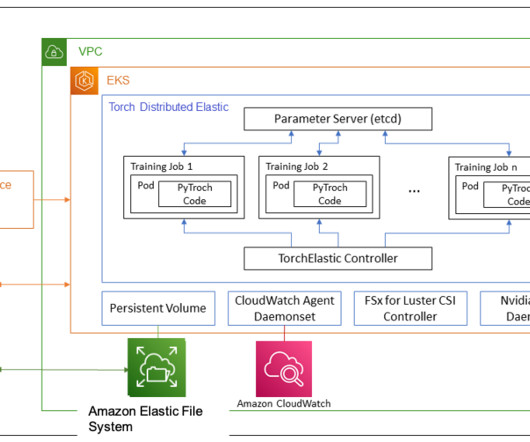

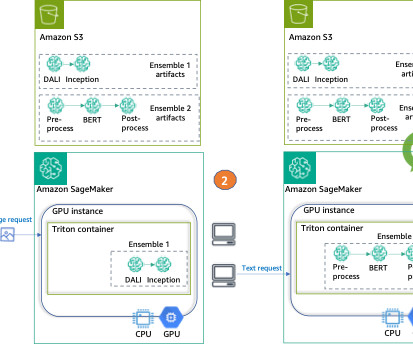

Amazon Elastic Compute Cloud (Amazon EC2) DL2q instances, powered by Qualcomm AI 100 Standard accelerators, can be used to cost-efficiently deploy deep learning (DL) workloads in the cloud. To learn more about tuning the performance of a model, see the Cloud AI 100 Key Performance Parameters Documentation. Roy from Qualcomm AI.
