Researchers at the University of Waterloo Introduce Orchid: Revolutionizing Deep Learning with Data-Dependent Convolutions for Scalable Sequence Modeling

Marktechpost

MAY 5, 2024

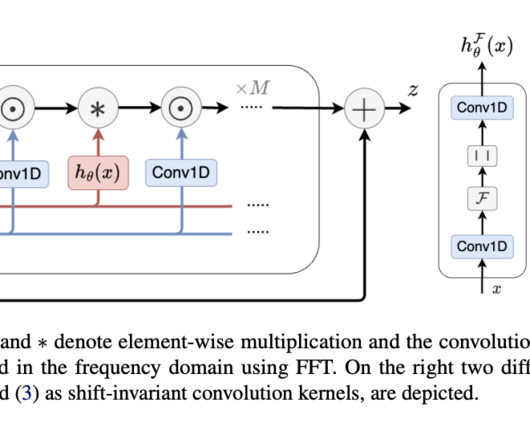

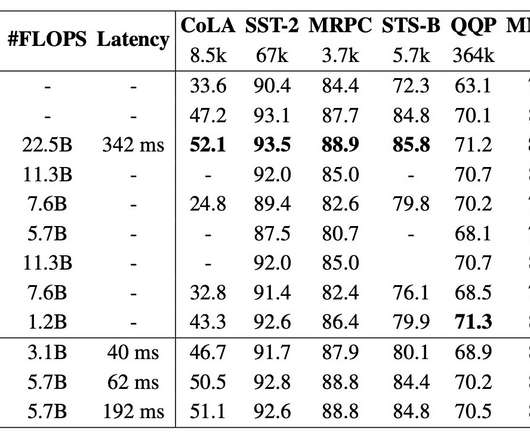

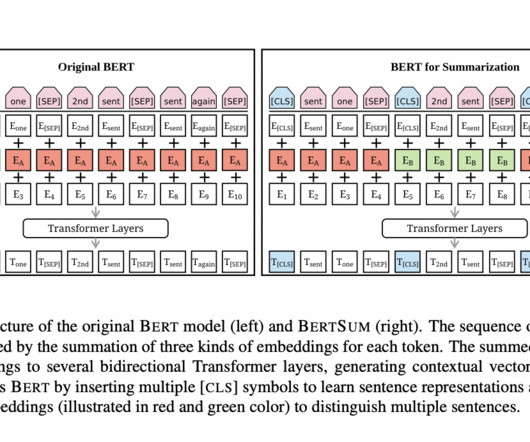

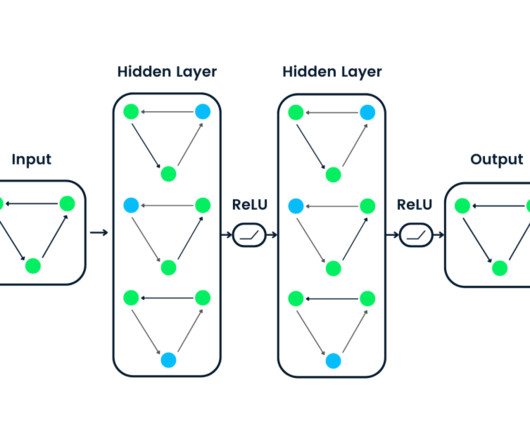

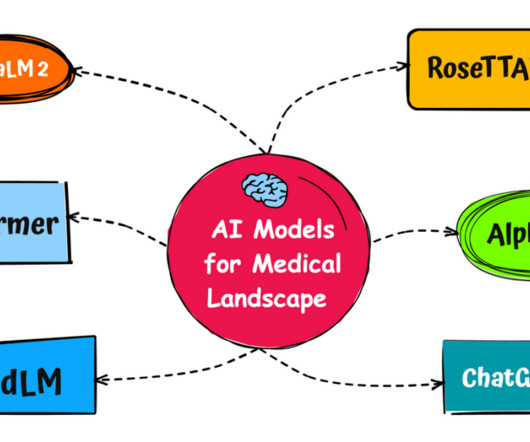

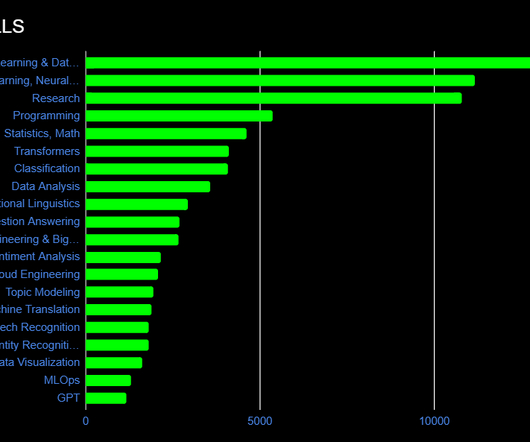

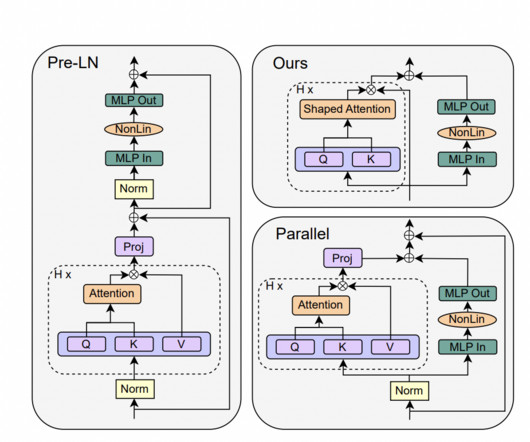

In deep learning, especially in NLP, image analysis, and biology, there is an increasing focus on developing models that are both computationally efficient and expressive. Orchid, a sequence-modeling architecture from researchers at the University of Waterloo built on data-dependent convolutions, is reported to outperform traditional attention-based models, such as BERT and Vision Transformers, across these domains while using smaller model sizes.
