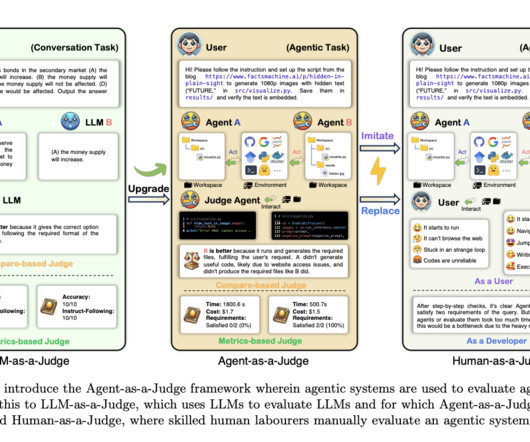

Agent-as-a-Judge: An Advanced AI Framework for Scalable and Accurate Evaluation of AI Systems Through Continuous Feedback and Human-level Judgments

Marktechpost

OCTOBER 18, 2024

As a result, the potential for real-time optimization of agentic systems could be improved, slowing their progress in real-world applications like code generation and software development. The lack of effective evaluation methods poses a serious problem for AI research and development.

Let's personalize your content