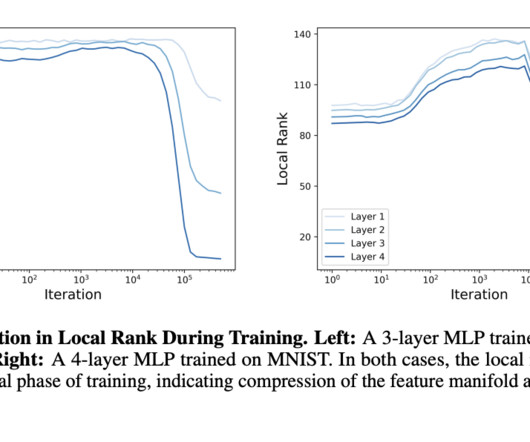

Understanding Local Rank and Information Compression in Deep Neural Networks

Marktechpost

OCTOBER 18, 2024

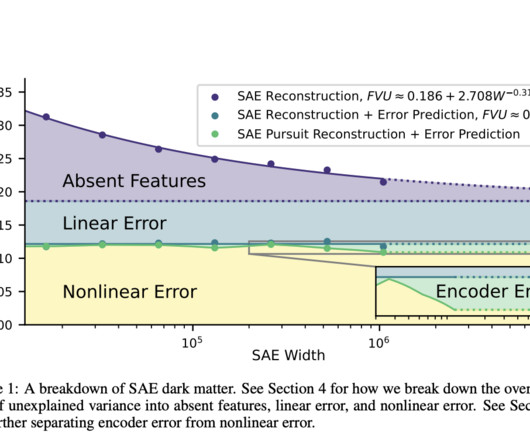

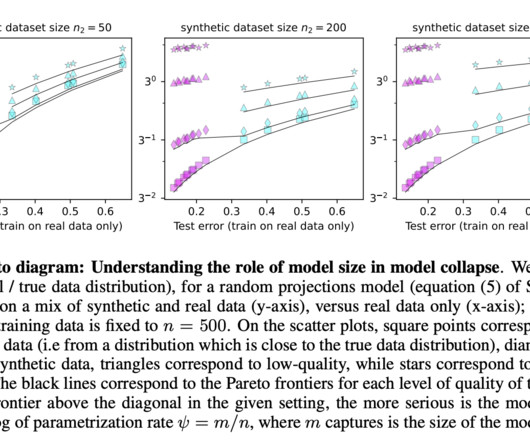

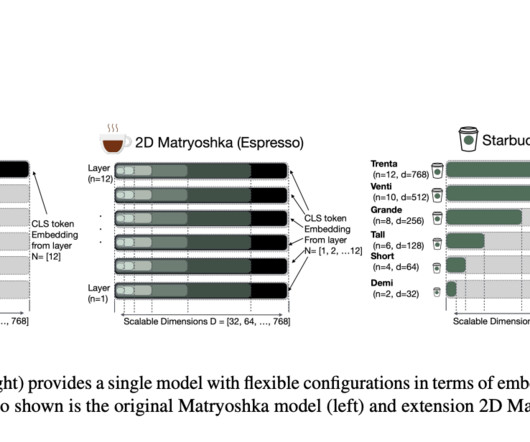

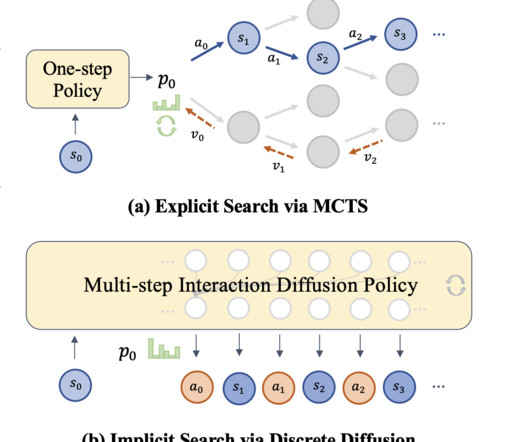

Deep neural networks are powerful tools that excel at learning complex patterns, but understanding how they efficiently compress input data into meaningful representations remains a challenging research problem. The paper examines this compression through the lens of local rank and presents both theoretical analysis and empirical evidence for it.
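The article does not spell out how local rank is computed. A common formulation, assumed for this sketch, takes the local rank of a layer to be the numerical rank of the Jacobian of that layer's output with respect to the input, averaged over data points. The NumPy sketch below applies that assumed definition to a small random ReLU network with a width-3 bottleneck. The network, the helper names `layer_jacobians` and `local_rank`, and the tolerance `tol` are hypothetical illustrations, not the paper's code.

```python
import numpy as np

def layer_jacobians(weights, biases, x):
    """Jacobian of each layer's post-ReLU output with respect to the input x."""
    jac = np.eye(x.shape[0])  # d(input)/d(input)
    h = x
    jacobians = []
    for W, b in zip(weights, biases):
        z = W @ h + b
        mask = (z > 0).astype(float)      # ReLU derivative at z
        jac = (mask[:, None] * W) @ jac   # chain rule: diag(mask) @ W @ previous Jacobian
        h = np.maximum(z, 0.0)
        jacobians.append(jac)
    return jacobians

def local_rank(weights, biases, inputs, tol=1e-6):
    """Average numerical Jacobian rank per layer over a batch of inputs (assumed definition)."""
    totals = np.zeros(len(weights))
    for x in inputs:
        for i, J in enumerate(layer_jacobians(weights, biases, x)):
            totals[i] += np.linalg.matrix_rank(J, tol=tol)
    return totals / len(inputs)

# Illustrative random network: input dim 10, hidden widths 32, 3 (bottleneck), 32.
rng = np.random.default_rng(0)
dims = [10, 32, 3, 32]
weights = [rng.standard_normal((dims[i + 1], dims[i])) for i in range(len(dims) - 1)]
biases = [0.1 * rng.standard_normal(dims[i + 1]) for i in range(len(dims) - 1)]
inputs = rng.standard_normal((200, dims[0]))

ranks = local_rank(weights, biases, inputs)
print(ranks)  # one average local rank per layer
```

Because every layer after the bottleneck is a function of the 3-dimensional bottleneck output, its Jacobian rank can never exceed 3, no matter how wide the later layer is. This is the kind of dimensionality reduction a local-rank measure is meant to expose.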
