The Best Inference APIs for Open LLMs to Enhance Your AI App

Unite.AI

DECEMBER 12, 2024

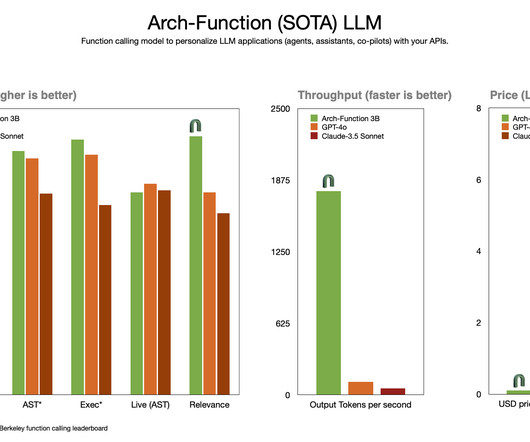

Groq is renowned for its high-performance AI inference technology. Its standout product, the Language Processing Unit (LPU) Inference Engine, combines specialized hardware and optimized software to deliver exceptional compute speed, quality, and energy efficiency. Pricing is quoted per million tokens.
