Google Research, 2022 & beyond: Algorithms for efficient deep learning

Google Research AI blog

FEBRUARY 7, 2023

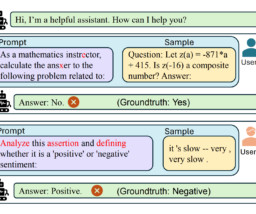

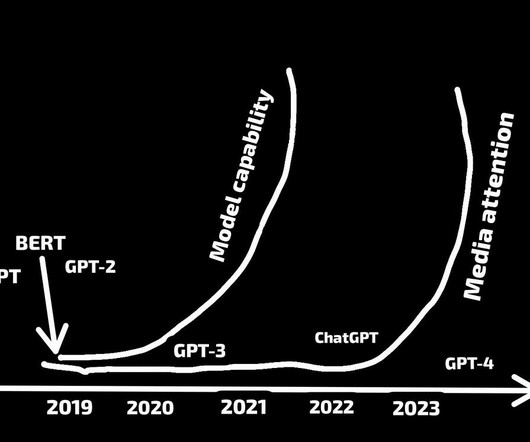

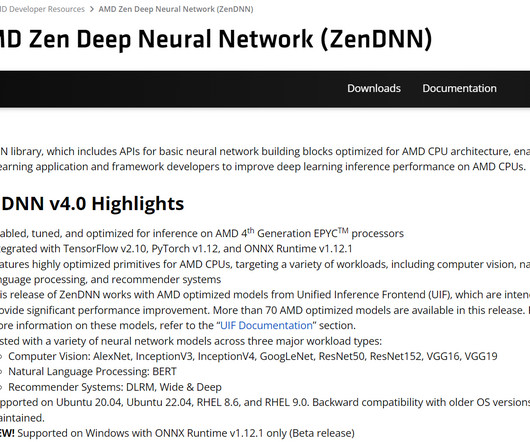

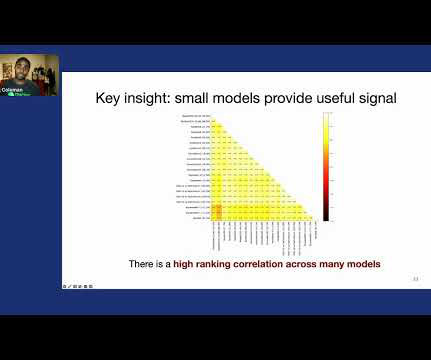

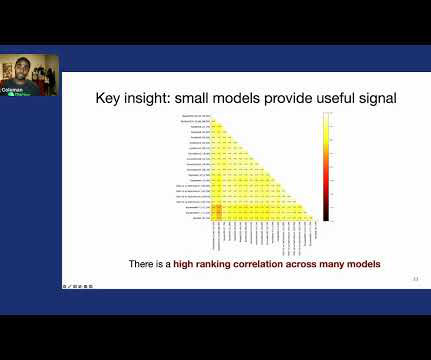

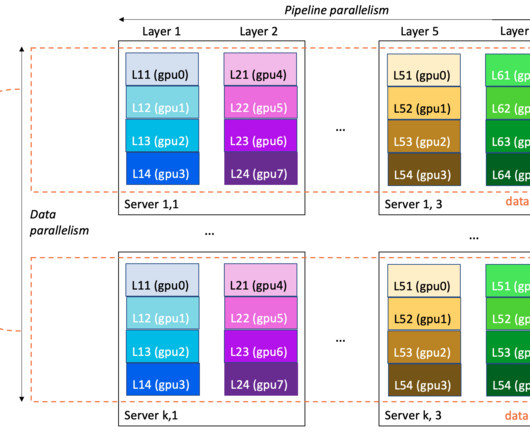

The explosion in deep learning a decade ago was catapulted in part by the convergence of new algorithms and architectures, a marked increase in data, and access to greater compute. Using this approach, for the first time, we were able to effectively train BERT using simple SGD without the need for adaptivity.