Google Research, 2022 & beyond: Algorithms for efficient deep learning

Google Research AI blog

FEBRUARY 7, 2023

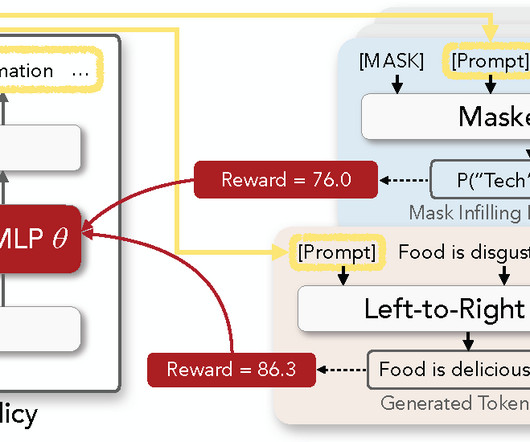

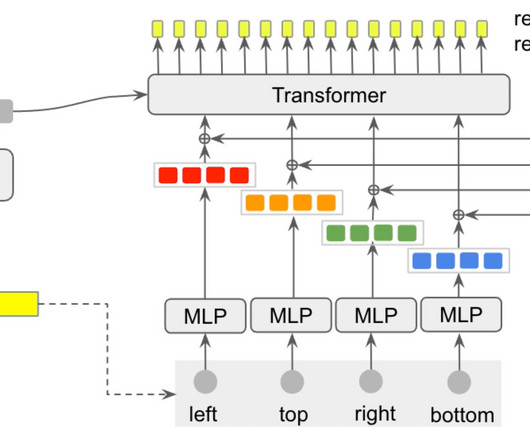

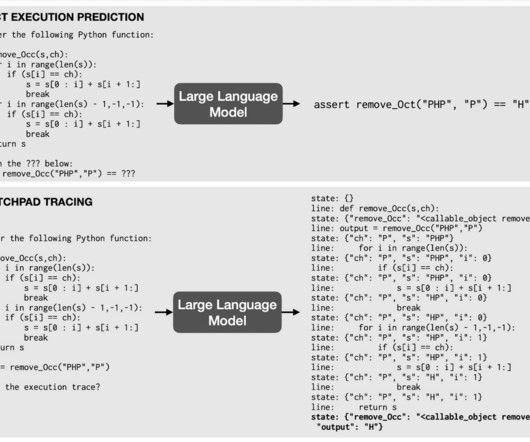

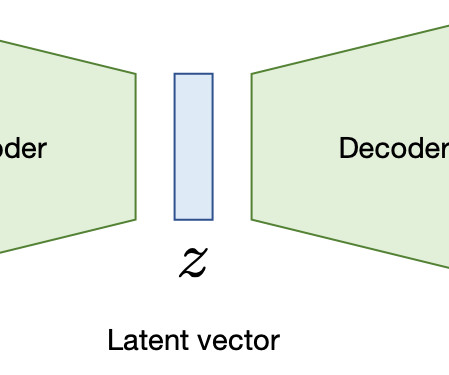

In 2022, we focused on three areas: infusing external knowledge by augmenting models with retrieved context, mixture of experts, and making transformers (which lie at the heart of most large ML models) more efficient. This was the fourth blog post in the “Google Research, 2022 & Beyond” series.
