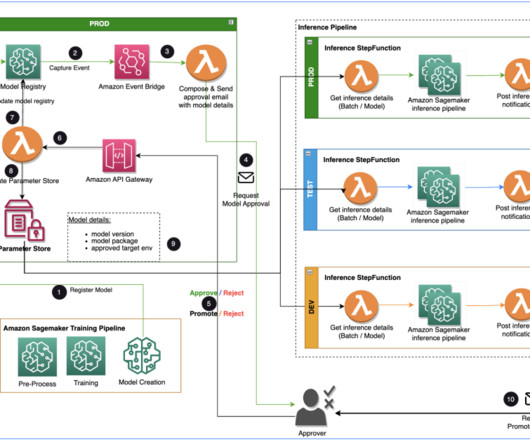

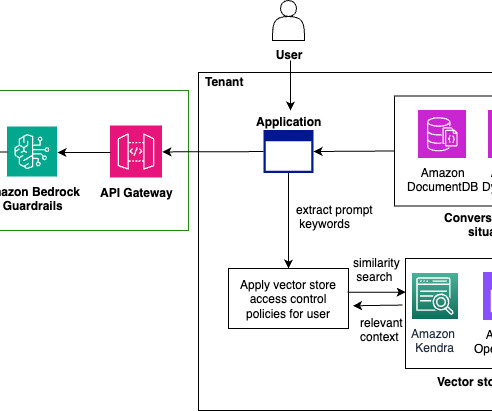

Integrate SaaS platforms with Amazon SageMaker to enable ML-powered applications

AWS Machine Learning Blog

JULY 6, 2023

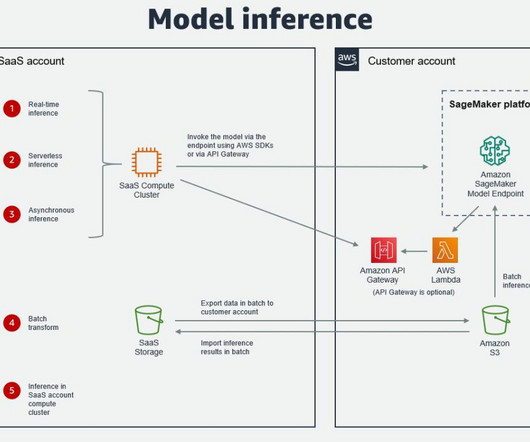

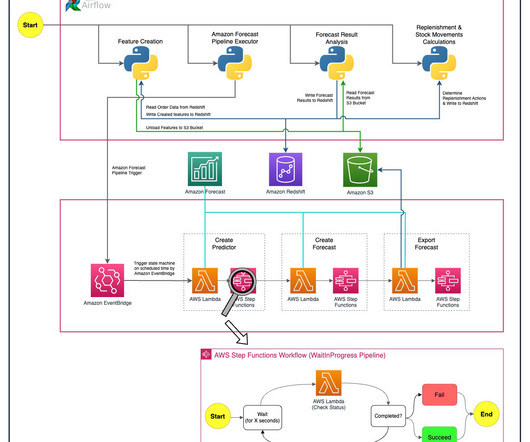

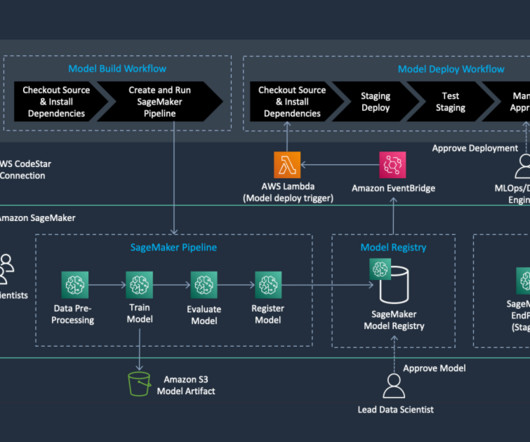

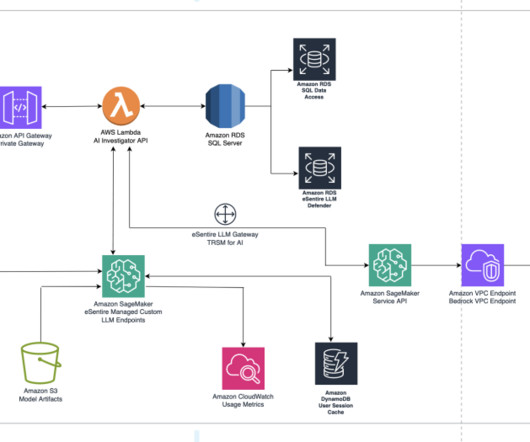

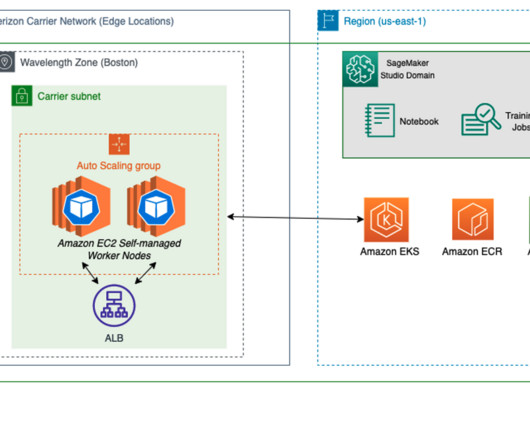

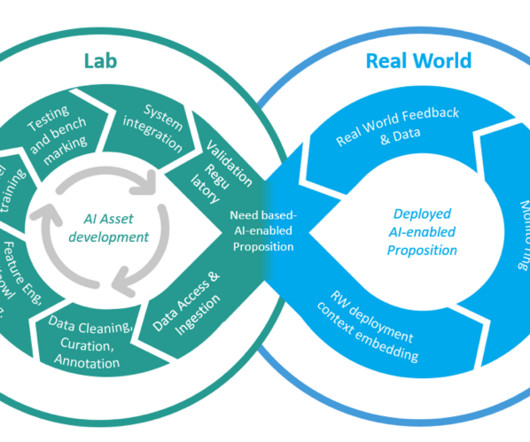

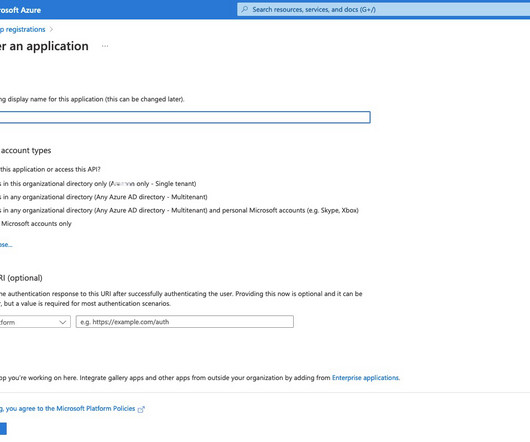

Many organizations choose SageMaker as their ML platform because it provides a common set of tools for developers and data scientists. SageMaker usually runs in a dedicated customer AWS account, separate from the account that hosts the SaaS platform. As a result, the SaaS platform needs cross-account access to the customer AWS account where SageMaker is running.
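One common way to grant that cross-account access is an IAM role in the customer account that the SaaS platform's account is allowed to assume. The following is a minimal Python sketch under that assumption, not necessarily the approach this post recommends. It builds the role's trust policy (with an `sts:ExternalId` condition) and a permissions policy scoped to `sagemaker:InvokeEndpoint` on a single endpoint. It then shows how the SaaS side could assume the role with boto3 and call the endpoint. The helper names, account IDs, session name, and endpoint name are hypothetical. The boto3 calls (`sts.assume_role`, and `invoke_endpoint` on the `sagemaker-runtime` client) are standard, but verify ARNs and permissions against your own setup.

```python
import json


def trust_policy(saas_account_id: str, external_id: str) -> dict:
    """Trust policy for a role in the customer account (hypothetical helper).

    Allows principals in the SaaS account to assume the role, but only when
    they present the agreed external ID (mitigates the confused-deputy problem).
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{saas_account_id}:root"},
                "Action": "sts:AssumeRole",
                "Condition": {"StringEquals": {"sts:ExternalId": external_id}},
            }
        ],
    }


def invoke_policy(region: str, customer_account_id: str, endpoint_name: str) -> dict:
    """Permissions policy limiting the role to invoking one SageMaker endpoint."""
    endpoint_arn = (
        f"arn:aws:sagemaker:{region}:{customer_account_id}:endpoint/{endpoint_name}"
    )
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "sagemaker:InvokeEndpoint",
                "Resource": endpoint_arn,
            }
        ],
    }


def to_json(policy: dict) -> str:
    """IAM APIs expect policy documents as JSON strings."""
    return json.dumps(policy, indent=2)


def invoke_cross_account(role_arn: str, external_id: str, region: str,
                         endpoint_name: str, payload: bytes) -> bytes:
    """Run from the SaaS account: assume the customer role, then call the endpoint."""
    import boto3  # imported lazily so the policy helpers work without boto3

    # Temporary credentials for the role in the customer account.
    creds = boto3.client("sts").assume_role(
        RoleArn=role_arn,
        RoleSessionName="saas-inference",  # hypothetical session name
        ExternalId=external_id,
    )["Credentials"]

    # SageMaker runtime client that acts inside the customer account.
    runtime = boto3.client(
        "sagemaker-runtime",
        region_name=region,
        aws_access_key_id=creds["AccessKeyId"],
        aws_secret_access_key=creds["SecretAccessKey"],
        aws_session_token=creds["SessionToken"],
    )
    response = runtime.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=payload,
    )
    return response["Body"].read()
```

In this sketch, the customer would create the role (for example with the IAM `create_role` API, passing `to_json(trust_policy(...))` as `AssumeRolePolicyDocument`) and attach the invoke policy. The SaaS platform would then call `invoke_cross_account` with the role's ARN. Scoping the permissions policy to a single endpoint ARN keeps the SaaS platform from reaching other resources in the customer account.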