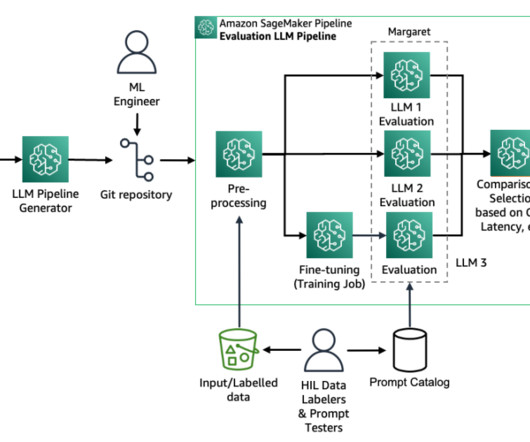

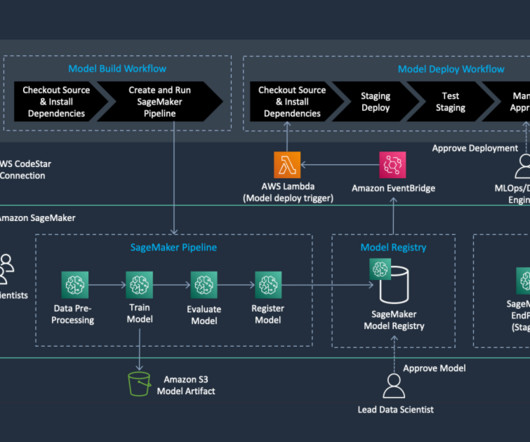

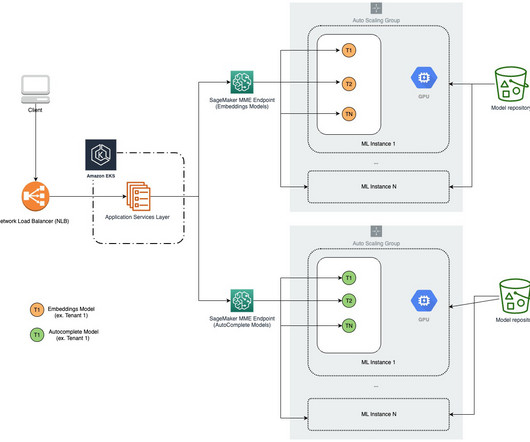

Governing the ML lifecycle at scale, Part 1: A framework for architecting ML workloads using Amazon SageMaker

AWS Machine Learning Blog

OCTOBER 20, 2023

Customers of every size and industry are innovating on AWS by infusing machine learning (ML) into their products and services. Recent developments in generative AI models have further sped up the need of ML adoption across industries.

Let's personalize your content