LlamaIndex: Augment your LLM Applications with Custom Data Easily

Unite.AI

OCTOBER 25, 2023

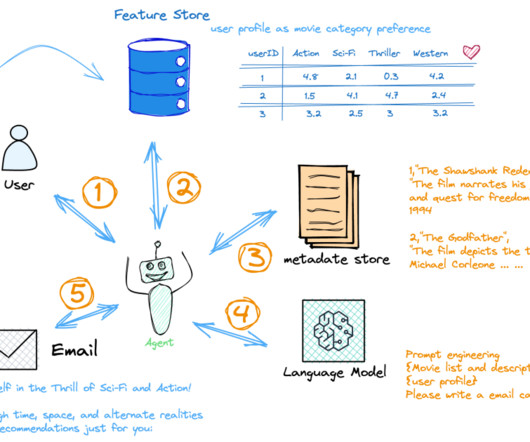

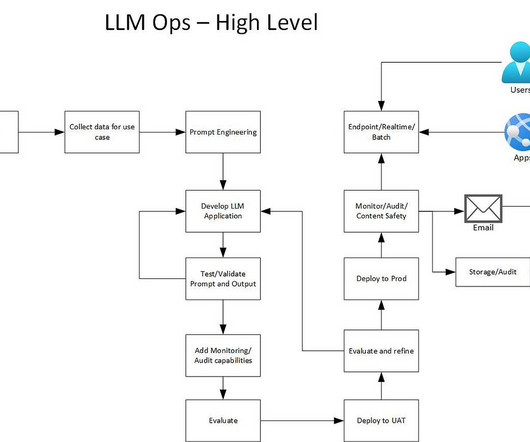

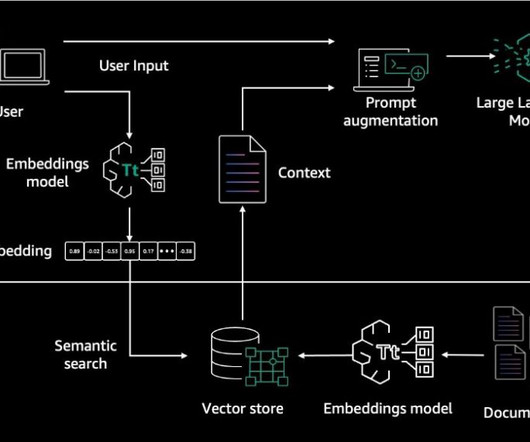

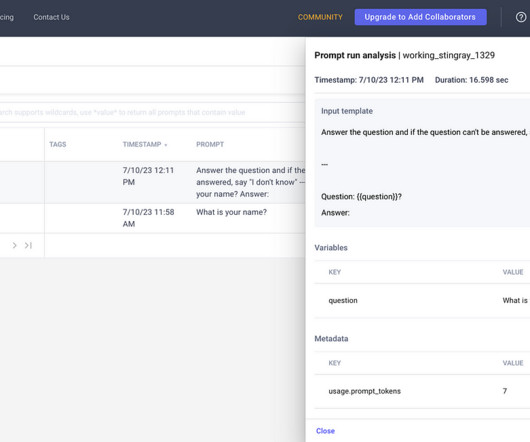

In-context learning has emerged as an alternative that prioritizes crafting inputs and prompts to give the LLM the context it needs to generate accurate outputs. Its drawback is that it relies on the user's skill and expertise in prompt engineering.
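To make the idea concrete, here is a minimal sketch of in-context prompting in plain Python. It is an illustration, not LlamaIndex's API: the `build_prompt` helper, the prompt wording, and the sample snippets are all hypothetical, and the resulting string would still need to be sent to whichever LLM you use.

```python
def build_prompt(question: str, context_snippets: list[str]) -> str:
    """Assemble a prompt that places supporting context ahead of the question.

    Hypothetical helper for illustration; the template wording is an assumption.
    """
    # Render each snippet as a bullet so the model can tell them apart.
    context_block = "\n".join(f"- {snippet}" for snippet in context_snippets)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


# Made-up custom data standing in for a user's own documents.
snippets = [
    "The support desk is open Monday to Friday.",
    "Refund requests are handled by the billing team.",
]
prompt = build_prompt("Who handles refund requests?", snippets)
print(prompt)
```

How well the model answers depends heavily on which snippets are chosen and how the template is phrased, which is the prompt-engineering burden the paragraph above describes.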
