LlamaIndex: Augment your LLM Applications with Custom Data Easily

Unite.AI

OCTOBER 25, 2023

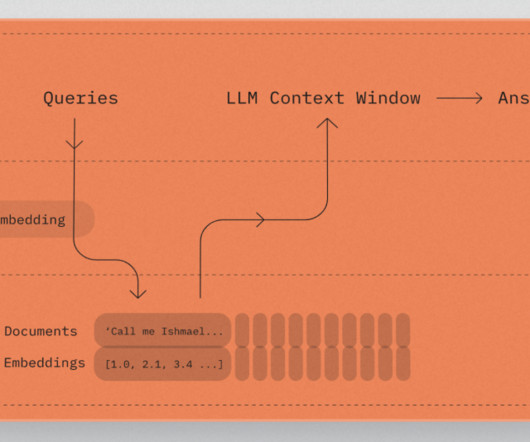

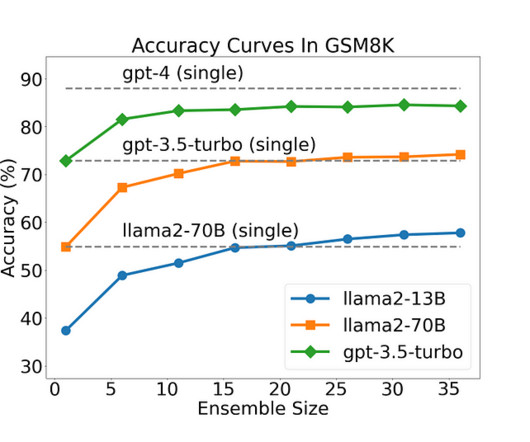

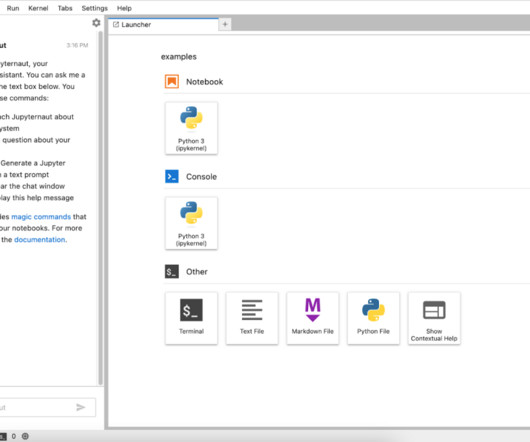

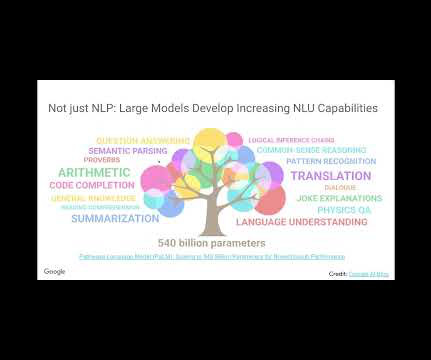

Large language models (LLMs) such as OpenAI's GPT series have been trained on a diverse range of publicly accessible data, demonstrating remarkable capabilities in text generation, summarization, question answering, and planning.

OpenAI Setup

By default, LlamaIndex utilizes OpenAI's gpt-3.5-turbo
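The basic idea behind augmenting an LLM with custom data is to retrieve the private snippets most relevant to a question and place them in the prompt sent to the model. The sketch below is a toy, library-free illustration of that idea under stated assumptions. It is not LlamaIndex's API: the functions `tokenize`, `score`, `retrieve` and `build_prompt`, the prompt wording, and the sample documents are all hypothetical. It ranks documents by simple word overlap, whereas LlamaIndex's vector index typically uses embedding similarity. No model is called; the output is only the assembled prompt.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query: str, doc: str) -> int:
    """Hypothetical relevance score: count of shared tokens (multiset intersection)."""
    return sum((Counter(tokenize(query)) & Counter(tokenize(doc))).values())


def retrieve(query: str, docs: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k documents with the highest overlap score (ties keep input order)."""
    ranked = sorted(docs, key=lambda doc: score(query, doc), reverse=True)
    return ranked[:top_k]


def build_prompt(query: str, docs: list[str], top_k: int = 2) -> str:
    """Assemble a prompt that puts the retrieved custom data before the question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, top_k))
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\nAnswer:"
    )


if __name__ == "__main__":
    # Hypothetical private documents standing in for an organization's own data.
    private_docs = [
        "Our refund window is 30 days from the date of purchase.",
        "The office is closed on public holidays.",
        "Support tickets are answered within two business days.",
    ]
    print(build_prompt("How many days is the refund window?", private_docs, top_k=1))
```

In a real application, the assembled prompt would then be sent to the configured LLM. A framework such as LlamaIndex handles the loading, indexing, retrieval and prompting steps for you.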
