Logging YOLOPandas with Comet-LLM

Heartbeat

JANUARY 19, 2024

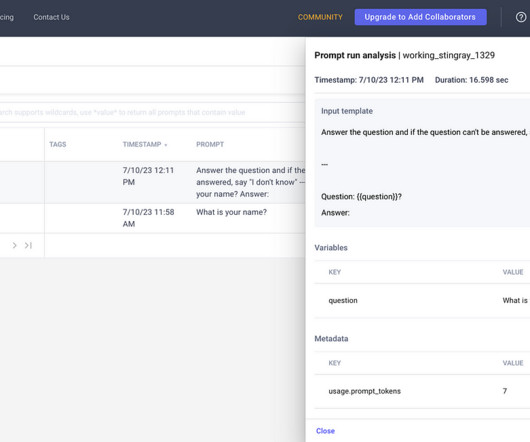

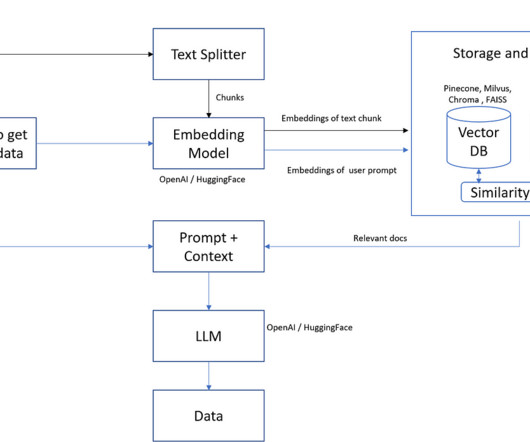

As prompt engineering is fundamentally different from training machine learning models, Comet has released a new SDK tailored for this use case comet-llm. In this article you will learn how to log the YOLOPandas prompts with comet-llm, keep track of the number of tokens used in USD($), and log your metadata.

Let's personalize your content