Enhancing AI Transparency and Trust with Composite AI

Unite.AI

APRIL 9, 2024

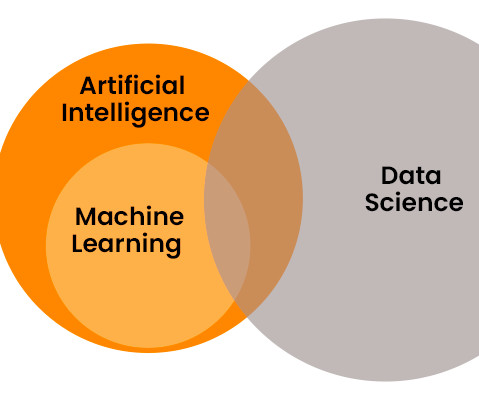

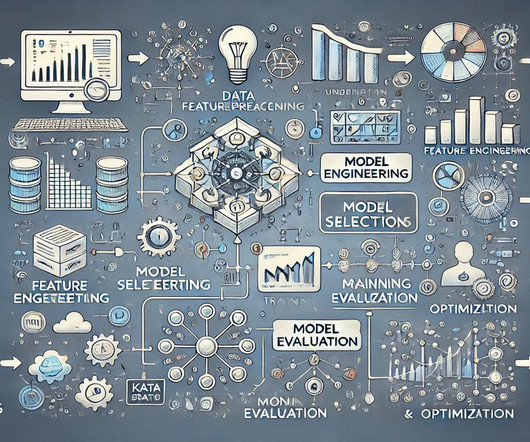

Composite AI is a cutting-edge approach to tackling complex business problems holistically. The techniques it combines include Machine Learning (ML), deep learning, Natural Language Processing (NLP), Computer Vision (CV), descriptive statistics, and knowledge graphs. Decision trees and rule-based models like CART and C4.5
