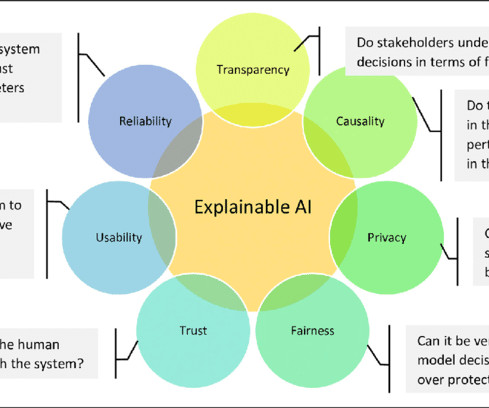

Top 10 Explainable AI (XAI) Frameworks

Marktechpost

APRIL 24, 2024

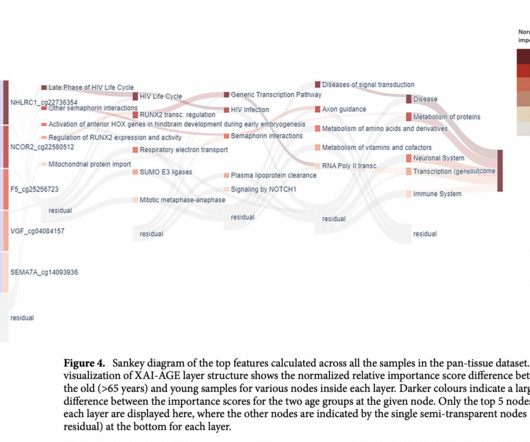

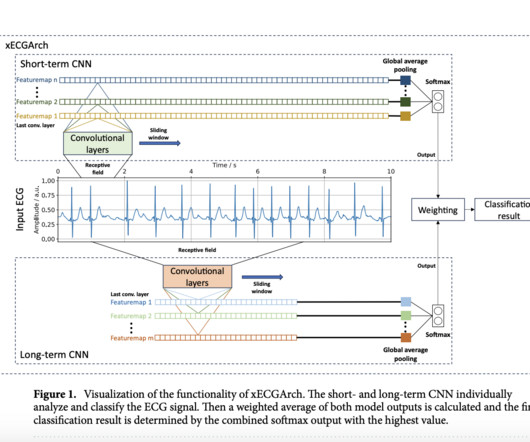

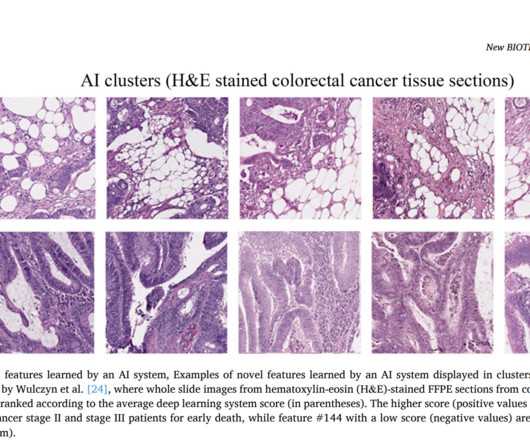

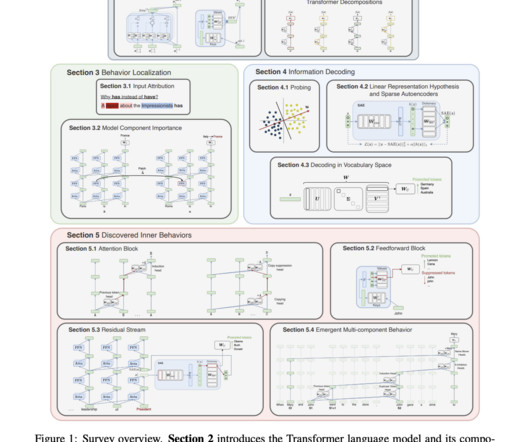

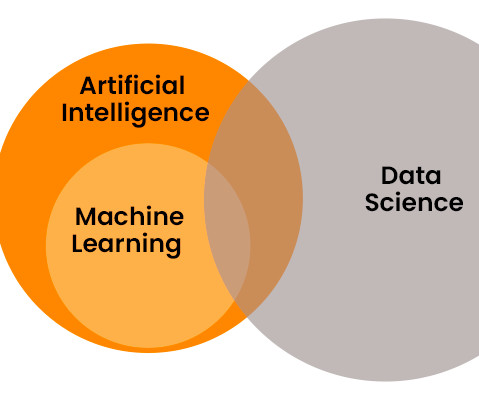

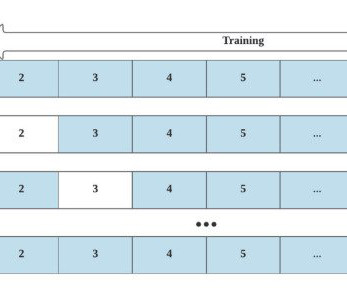

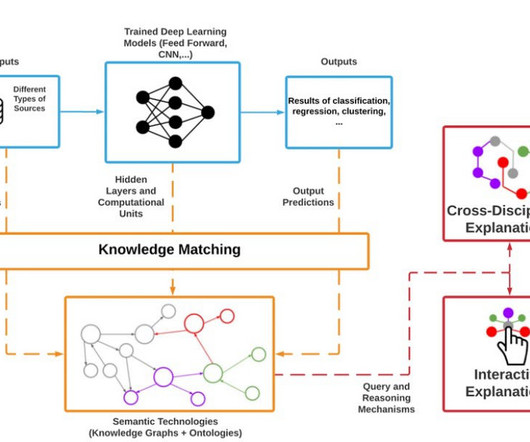

The increasing complexity of AI systems, particularly with the rise of opaque models like Deep Neural Networks (DNNs), has highlighted the need for transparency in decision-making processes.

Moreover, it can compute these contribution scores efficiently in just one backward pass through the network.
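This excerpt does not name the method that computes contribution scores "in just one backward pass." As an assumed illustration only, the sketch below uses the Rescale rule from DeepLIFT, an attribution method known for this property. It runs one forward pass on the input and one on a reference input, then a single backward pass that propagates "multipliers" from the output to each input. The contribution of input i is its multiplier times its difference from the reference, and the contributions sum to the change in output. The tiny 2-2-1 ReLU network, its weights, the zero reference, and the function names `forward` and `deeplift_rescale` are hypothetical choices for this example, not the article's code or any library's API.

```python
"""Minimal sketch (assumed example): DeepLIFT-style contribution scores
using the Rescale rule on a tiny hypothetical 2-2-1 ReLU network."""


def relu(v):
    return [max(0.0, a) for a in v]


def forward(x, W1, b1, w2, b2):
    """Return hidden pre-activations z, hidden activations h, and scalar output y."""
    z = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(W1, b1)]
    h = relu(z)
    y = sum(w * hj for w, hj in zip(w2, h)) + b2
    return z, h, y


def deeplift_rescale(x, x_ref, W1, b1, w2, b2):
    """Contribution of each input feature to y(x) - y(x_ref)."""
    # Forward passes on the actual input and on the reference input.
    z, h, _ = forward(x, W1, b1, w2, b2)
    z0, h0, _ = forward(x_ref, W1, b1, w2, b2)

    # Single backward pass, starting from a multiplier of 1 at the output.
    # Linear output layer: each hidden unit's multiplier is its weight.
    m_h = list(w2)

    # ReLU under the Rescale rule: multiplier = (change in output) / (change in input).
    # If the pre-activation did not change, fall back to the ordinary ReLU gradient.
    m_z = []
    for mj, zj, z0j, hj, h0j in zip(m_h, z, z0, h, h0):
        dz = zj - z0j
        slope = (hj - h0j) / dz if abs(dz) > 1e-12 else (1.0 if zj > 0 else 0.0)
        m_z.append(mj * slope)

    # Linear input layer: multipliers flow back through the weights.
    m_x = [sum(m_z[j] * W1[j][i] for j in range(len(W1))) for i in range(len(x))]

    # Contribution = multiplier * (input - reference).
    return [m * (xi - ri) for m, xi, ri in zip(m_x, x, x_ref)]


# Usage with hypothetical weights and an all-zeros reference input.
W1 = [[1.0, -2.0], [0.5, 1.0]]
b1 = [0.5, -1.0]
w2 = [2.0, -1.0]
b2 = 0.0
x, x_ref = [3.0, 1.0], [0.0, 0.0]

scores = deeplift_rescale(x, x_ref, W1, b1, w2, b2)
delta = forward(x, W1, b1, w2, b2)[2] - forward(x_ref, W1, b1, w2, b2)[2]
print([round(s, 6) for s in scores])  # [5.1, -4.6]
print(round(sum(scores), 6), delta)   # 0.5 0.5 (scores sum to the output change)
```

The reference input is a design choice: contributions explain the output *relative to* that baseline, so a different reference yields different scores. For comparison, plain gradient-times-input on the same network gives [4.5, -5.0], which does not sum to the 0.5 change in output.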

Let's personalize your content