Build an image search engine with Amazon Kendra and Amazon Rekognition

AWS Machine Learning Blog

MAY 5, 2023

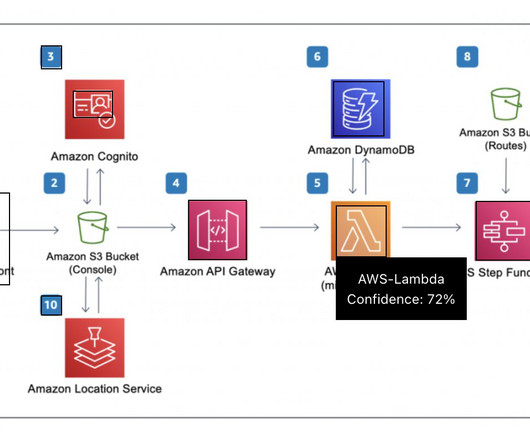

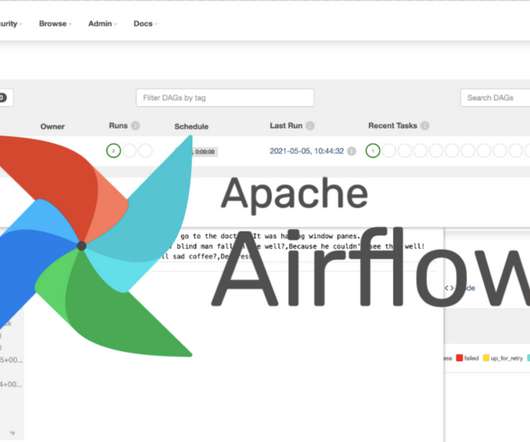

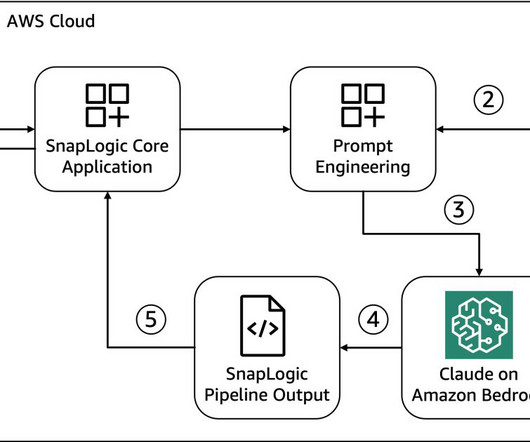

The following figure shows an example diagram that illustrates an orchestrated extract, transform, and load (ETL) architecture solution. For example, searching for the terms “How to orchestrate ETL pipeline” returns results of architecture diagrams built with AWS Glue and AWS Step Functions. join(", "), }; }).catch((error)

Let's personalize your content