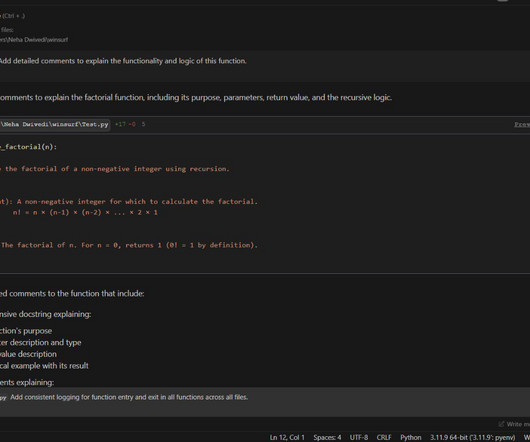

Windsurf Editor: Revolutionizing Coding with AI-Powered Intelligence

Analytics Vidhya

NOVEMBER 23, 2024

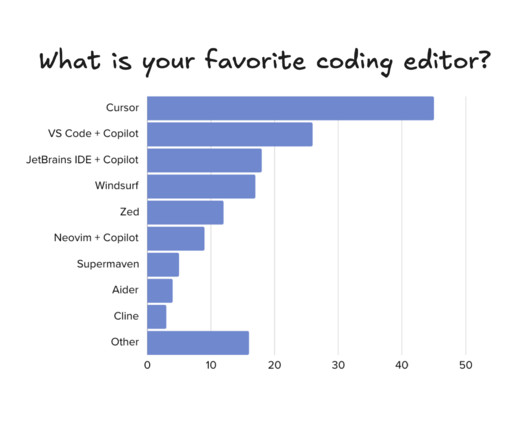

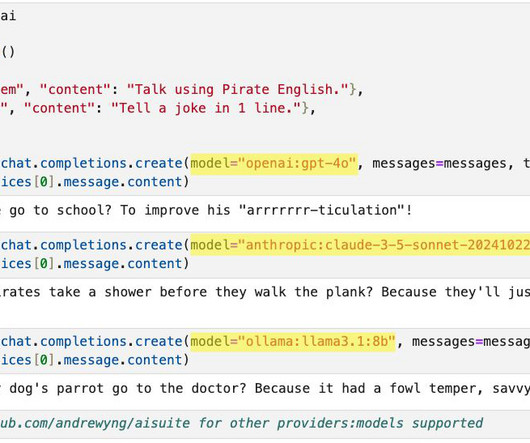

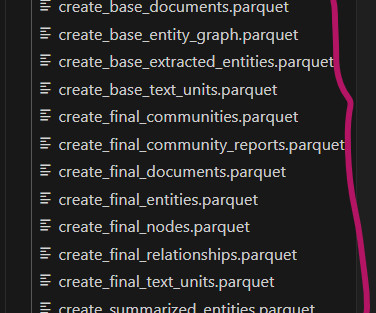

In the dynamic world of software development, where innovation is the cornerstone of success, developers constantly seek tools that can enhance their productivity and streamline their workflows. Enter Windsurf Editor by Codeium, a revolutionary platform that redefines the coding experience by integrating the power of artificial intelligence (AI). The tech world is witnessing an extraordinary […]

Let's personalize your content