How Deltek uses Amazon Bedrock for question and answering on government solicitation documents

AWS Machine Learning Blog

AUGUST 9, 2024

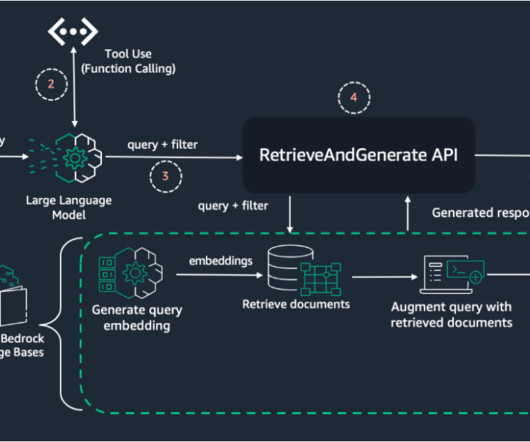

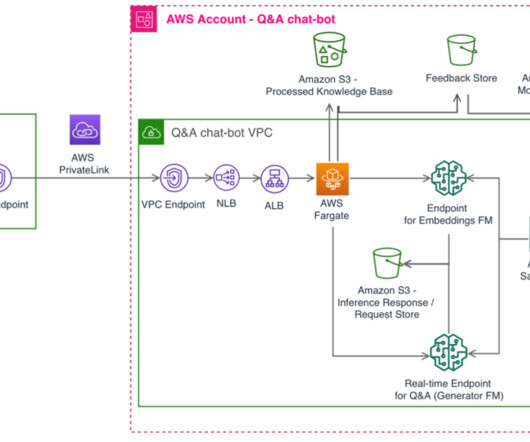

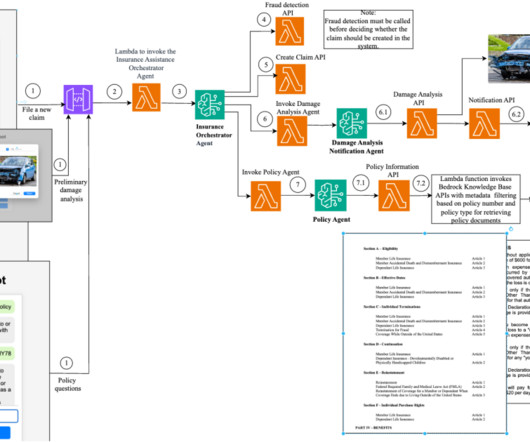

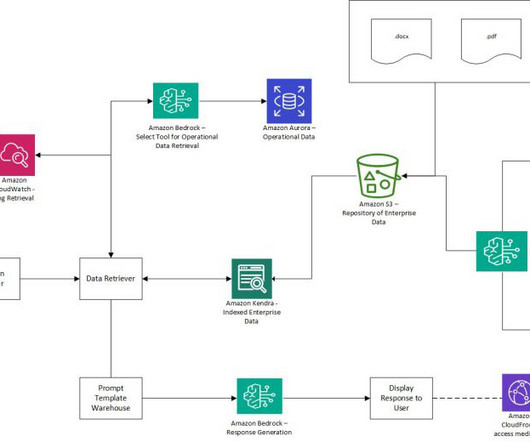

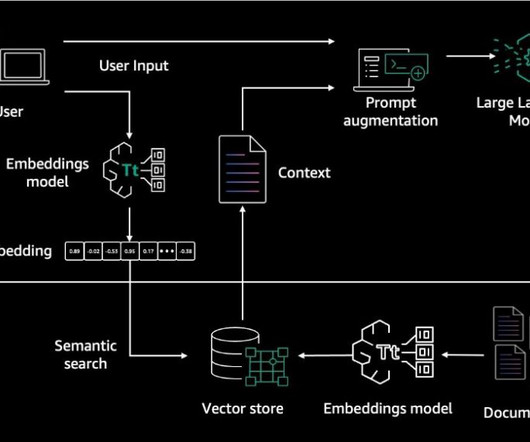

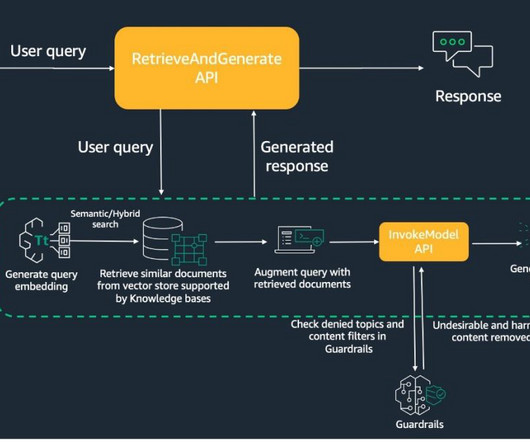

Question and answering (Q&A) using documents is a commonly used application in various use cases like customer support chatbots, legal research assistants, and healthcare advisors. In this collaboration, the AWS GenAIIC team created a RAG-based solution for Deltek to enable Q&A on single and multiple government solicitation documents.

Let's personalize your content