Introduction to Embedchain – A Data Platform Tailored for LLMs

Analytics Vidhya

NOVEMBER 5, 2023

Introduction The introduction to tools like LangChain, and LangFlow, has made things easier when building applications with Large Language Models.

This site uses cookies to improve your experience. To help us insure we adhere to various privacy regulations, please select your country/region of residence. If you do not select a country, we will assume you are from the United States. Select your Cookie Settings or view our Privacy Policy and Terms of Use.

Cookies and similar technologies are used on this website for proper function of the website, for tracking performance analytics and for marketing purposes. We and some of our third-party providers may use cookie data for various purposes. Please review the cookie settings below and choose your preference.

Used for the proper function of the website

Used for monitoring website traffic and interactions

Cookies and similar technologies are used on this website for proper function of the website, for tracking performance analytics and for marketing purposes. We and some of our third-party providers may use cookie data for various purposes. Please review the cookie settings below and choose your preference.

Analytics Vidhya

NOVEMBER 5, 2023

Introduction The introduction to tools like LangChain, and LangFlow, has made things easier when building applications with Large Language Models.

IBM Journey to AI blog

SEPTEMBER 27, 2023

Large language models (LLMs) are foundation models that use artificial intelligence (AI), deep learning and massive data sets, including websites, articles and books, to generate text, translate between languages and write many types of content. The license may restrict how the LLM can be used.

This site is protected by reCAPTCHA and the Google Privacy Policy and Terms of Service apply.

How to Achieve High-Accuracy Results When Using LLMs

Maximizing Profit and Productivity: The New Era of AI-Powered Accounting

Relevance, Reach, Revenue: How to Turn Marketing Trends From Hype to High-Impact

Automation, Evolved: Your New Playbook For Smarter Knowledge Work

Snorkel AI

JUNE 12, 2023

In 2007, Google researchers published a paper on a class of statistical language models they dubbed “large language models”, which they reported as achieving a new state of the art in performance. They used a very standard model and a decoding algorithm so simple they named it “Stupid Backoff” 1.

Snorkel AI

JUNE 12, 2023

In 2007, Google researchers published a paper on a class of statistical language models they dubbed “large language models”, which they reported as achieving a new state of the art in performance. They used a very standard model and a decoding algorithm so simple they named it “Stupid Backoff” 1.

IBM Journey to AI blog

SEPTEMBER 21, 2023

Generative AI and large language models are poised to impact how we all access and use information. is the first enterprise-grade LLM offering that brings trustworthy, scalable, and transparent AI that can be delivered on timelines and maintained at costs that align with industry leading return on investment (ROI).”

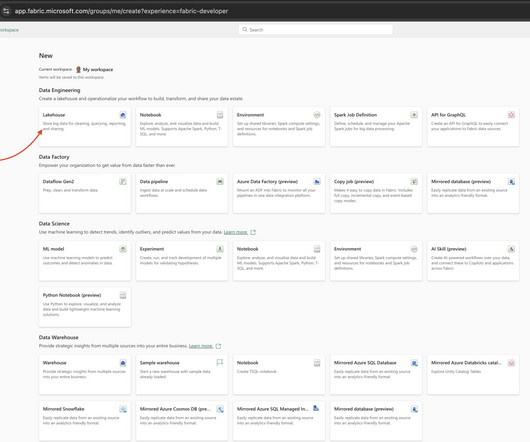

John Snow Labs

FEBRUARY 12, 2025

John Snow Labs’ Medical Language Models library is an excellent choice for leveraging the power of large language models (LLM) and natural language processing (NLP) in Azure Fabric due to its seamless integration, scalability, and state-of-the-art accuracy on medical tasks.

IBM Journey to AI blog

APRIL 24, 2024

Large language models (LLMs) may be the biggest technological breakthrough of the decade. Prompt injections are a type of attack where hackers disguise malicious content as benign user input and feed it to an LLM application. Hackers do not need to feed prompts directly to LLMs for these attacks to work.

IBM Journey to AI blog

AUGUST 31, 2023

IBM watsonx Assistant connects to watsonx, IBM’s enterprise-ready AI and data platform for training, deploying and managing foundation models, to enable business users to automate accurate, conversational question-answering with customized watsonx large language models.

IBM Journey to AI blog

AUGUST 7, 2024

In the year since we unveiled IBM’s enterprise generative AI (gen AI) and data platform, we’ve collaborated with numerous software companies to embed IBM watsonx™ into their apps, offerings and solutions. IBM’s established expertise and industry trust make it an ideal integration partner.”

JUNE 6, 2023

Cloudera got its start in the Big Data era and is now moving quickly into the era of Big AI with large language models (LLMs). Today, Cloudera announced its strategy and tools for helping enterprises integrate the power of LLMs and generative AI into the company’s Cloudera Data Platform (CDP). …

IBM Journey to AI blog

JUNE 19, 2024

Thankfully, retrieval-augmented generation (RAG) has emerged as a promising solution to ground large language models (LLMs) on the most accurate, up-to-date information. IBM unveiled its new AI and data platform, watsonx™, which offers RAG, back in May 2023.

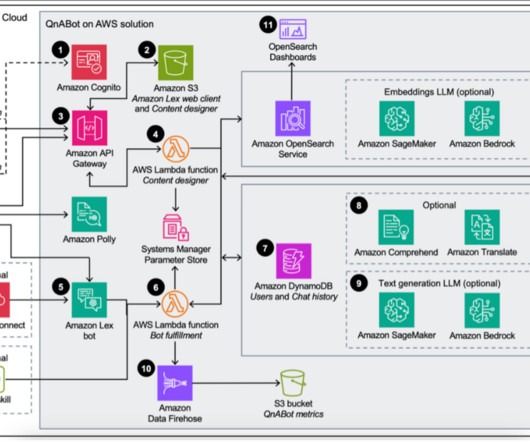

AWS Machine Learning Blog

APRIL 1, 2025

Understanding Constitutional AI Constitutional AI is designed to align large language models (LLMs) with human values and ethical considerations. It works by integrating a set of predefined rules, principles, and constraints into the LLMs core architecture and training process. with st.spinner(f"Generating."): .

IBM Journey to AI blog

SEPTEMBER 28, 2023

An AI and data platform, such as watsonx, can help empower businesses to leverage foundation models and accelerate the pace of generative AI adoption across their organization. The latest open-source LLM model we added this month includes Meta’s 70 billion parameter model Llama 2-chat inside the watsonx.ai

NOVEMBER 19, 2024

Pre-filtered documents that relate to the user query are included in the prompt of the large language model (LLM) that summarizes the answer. Then, Lambda replies back to the web interface with the LLM completion (reply). He helps customers and partners build big data platform and generative AI applications.

IBM Journey to AI blog

APRIL 9, 2024

This allows the Masters to scale analytics and AI wherever their data resides, through open formats and integration with existing databases and tools. “Hole distances and pin positions vary from round to round and year to year; these factors are important as we stage the data.”

Snorkel AI

JANUARY 8, 2025

In todays fast-paced AI landscape, seamless integration between data platforms and AI development tools is critical. At Snorkel, weve partnered with Databricks to create a powerful synergy between their data lakehouse and our Snorkel Flow AI data development platform.

AWS Machine Learning Blog

MARCH 10, 2025

Recommendation agent Analyzes the aggregated data to provide tailored recommendations for precise input applications, product placement, and strategies for pest and disease control. This experience was instrumental in her professional growth.

TheSequence

MARCH 23, 2025

. 🔎 AI Research Synthetic Data and Differential Privacy In the paper "Private prediction for large-scale synthetic text generation " researchers from Google present an approach for generating differentially private synthetic text using large language models via private prediction. Mistral Small 3.1

NVIDIA

JULY 23, 2024

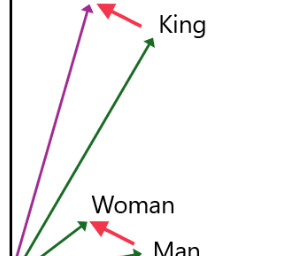

An embedding model transforms diverse data — such as text, images, charts and video — into numerical vectors, stored in a vector database, while capturing their meaning and nuance. Embedding models are fast and computationally less expensive than traditional large language models, or LLMs.

Unite.AI

SEPTEMBER 10, 2024

In his spare time, Eric enjoys playing with ChatGPT and large language models and craft cocktail making. What inspired you to co-found Encord, and how did your experience in particle physics and quantitative finance shape your approach to solving the “data problem” in AI? He holds an S.M.

AWS Machine Learning Blog

MARCH 5, 2025

These assistants can be powered by various backend architectures including Retrieval Augmented Generation (RAG), agentic workflows, fine-tuned large language models (LLMs), or a combination of these techniques. To learn more about FMEval, see Evaluate large language models for quality and responsibility of LLMs.

IBM Journey to AI blog

AUGUST 1, 2023

For example, a foundation model might be used as the basis for a generative AI model that is then fine-tuned with additional manufacturing datasets to assist in the discovery of safer and faster ways to manufacturer a type of product. An open-source model, Google created BERT in 2018.

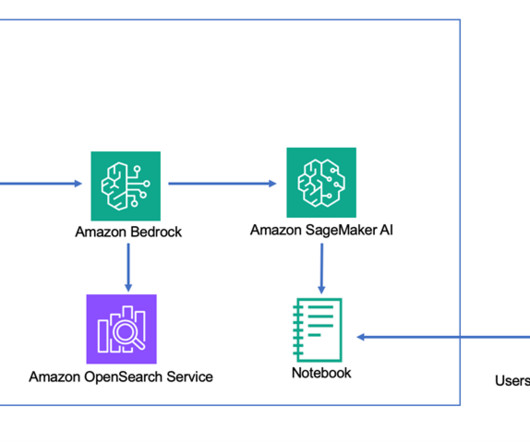

AWS Machine Learning Blog

JULY 29, 2024

In this post, we show how native integrations between Salesforce and Amazon Web Services (AWS) enable you to Bring Your Own Large Language Models (BYO LLMs) from your AWS account to power generative artificial intelligence (AI) applications in Salesforce. For this post, we use the Anthropic Claude 3 Sonnet model.

IBM Journey to AI blog

OCTOBER 14, 2024

Just last month, Salesforce made a major acquisition to power its Agentforce platform—just one in a number of recent investments in unstructured data management providers. “Most data being generated every day is unstructured and presents the biggest new opportunity.”

IBM Journey to AI blog

JULY 17, 2023

Currently chat bots are relying on rule-based systems or traditional machine learning algorithms (or models) to automate tasks and provide predefined responses to customer inquiries. is a studio to train, validate, tune and deploy machine learning (ML) and foundation models for Generative AI. Watsonx.ai

IBM Journey to AI blog

APRIL 18, 2024

AI systems like LaMDA and GPT-3 excel at generating human-quality text, accomplishing specific tasks, translating languages as needed, and creating different kinds of creative content. Achieving these feats is accomplished through a combination of sophisticated algorithms, natural language processing (NLP) and computer science principles.

AWS Machine Learning Blog

JUNE 18, 2024

This post presents a solution that uses a generative artificial intelligence (AI) to standardize air quality data from low-cost sensors in Africa, specifically addressing the air quality data integration problem of low-cost sensors. The solution only invokes the LLM for new device data file type (code has not yet been generated).

John Snow Labs

JANUARY 14, 2025

This is the result of a concentrated effort to deeply integrate its technology across a range of cloud and data platforms, making it easier for customers to adopt and leverage its technology in a private, safe, and scalable way.

AWS Machine Learning Blog

AUGUST 15, 2024

QnABot on AWS provides access to multiple FMs through Amazon Bedrock, so you can create conversational interfaces based on your customers’ language needs (such as Spanish, English, or French), sophistication of questions, and accuracy of responses based on user intent.

ODSC - Open Data Science

FEBRUARY 22, 2024

8 AI Research Labs Pushing the Boundaries of Artificial Intelligence These eight AI research labs are designing the future of the field of data science, ranging from developing new LLMs to using AI to improve quality of life. Here’s a bit more on why that’s the case. Register now for 40% off! Sign up for our new newsletter here!

TheSequence

JUNE 18, 2023

We discuss Google Research’s paper about REALM, the original retrieval-augmented foundation model and the new version of the Ray platform that includes support for LLMs. Edge 302: We deep dive into MPT-7B, an open source LLM that supports 65k tokens.

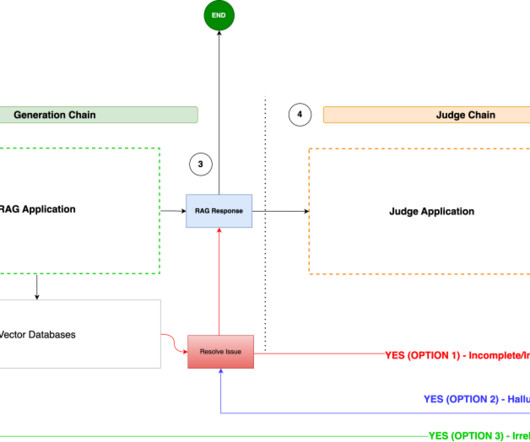

AWS Machine Learning Blog

SEPTEMBER 6, 2024

Generative artificial intelligence (AI) applications powered by large language models (LLMs) are rapidly gaining traction for question answering use cases. To learn more about FMEval, refer to Evaluate large language models for quality and responsibility.

Snorkel AI

JANUARY 8, 2025

In todays fast-paced AI landscape, seamless integration between data platforms and AI development tools is critical. At Snorkel, weve partnered with Databricks to create a powerful synergy between their data lakehouse and our Snorkel Flow AI data development platform.

The MLOps Blog

MARCH 12, 2024

TL;DR LLMOps involves managing the entire lifecycle of Large Language Models (LLMs), including data and prompt management, model fine-tuning and evaluation, pipeline orchestration, and LLM deployment. What is Large Language Model Operations (LLMOps)?

ODSC - Open Data Science

JANUARY 9, 2025

With the advent of Generative AI and Large Language Models (LLMs), we witnessed a paradigm shift in Application development, paving the way for a new wave of LLM-powered applications. We configure the agent asfollows: LLM: we can choose a language model such as GPT-4o, Llama-3, Mistral and manyothers.

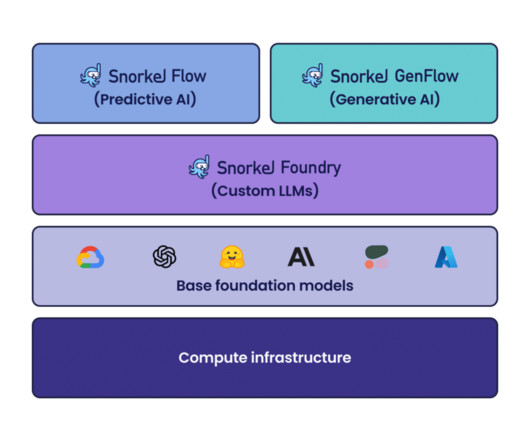

Snorkel AI

JUNE 9, 2023

Snorkel AI wrapped the second day of our The Future of Data-Centric AI virtual conference by showcasing how Snorkel’s data-centric platform has enabled customers to succeed, taking a deep look at Snorkel Flow’s capabilities, and announcing two new solutions. This approach, Zhang said, yields several advantages.

Snorkel AI

JUNE 9, 2023

Snorkel AI wrapped the second day of our The Future of Data-Centric AI virtual conference by showcasing how Snorkel’s data-centric platform has enabled customers to succeed, taking a deep look at Snorkel Flow’s capabilities, and announcing two new solutions. This approach, Zhang said, yields several advantages.

ODSC - Open Data Science

MARCH 8, 2024

Be sure to check out her talk, “ The AI Paradigm Shift: Under the Hood of a Large Language Models ,” there! An implementation with CLIP and GPT-4-vision In recent years, Large Language Models (LLMs) have demonstrated remarkable capabilities across various natural language understanding and generation tasks.

Snorkel AI

JULY 14, 2023

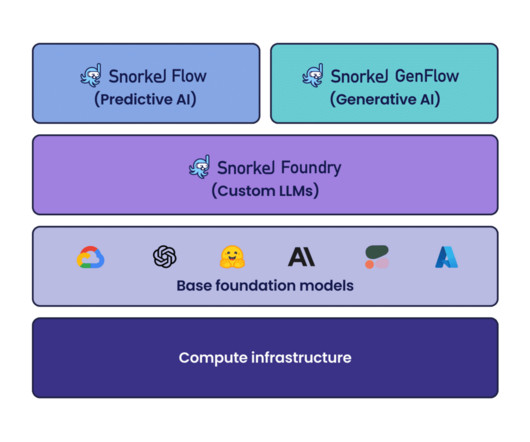

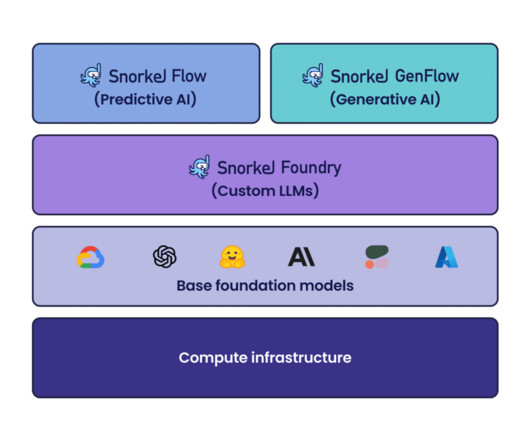

In addition to the latest release of Snorkel Flow, we recently introduced Foundation Model Data Platform that expands programmatic data development beyond labeling for predictive AI with two core solutions: Snorkel GenFlow for building generative AI applications and Snorkel Foundry for developing custom LLMs with proprietary data.

Snorkel AI

JULY 14, 2023

In addition to the latest release of Snorkel Flow, we recently introduced Foundation Model Data Platform that expands programmatic data development beyond labeling for predictive AI with two core solutions: Snorkel GenFlow for building generative AI applications and Snorkel Foundry for developing custom LLMs with proprietary data.

ODSC - Open Data Science

OCTOBER 20, 2023

Build and productionize LLM models with ease with Dagster Pedram Navid | Head of Data Engineering and Developer Relations | Elementl/Dagster Labs During this session, you’ll discuss the role of orchestration in LLM training and deployment and the importance of an asset-centric framework in data engineering.

TransOrg Analytics

APRIL 21, 2024

Different Types of Generative AI Models Generative AI represents cutting-edge innovations that are transforming industries worldwide. Understanding the different types of Generative AI models is essential for navigating the evolving landscape of modern AI.

ODSC - Open Data Science

JULY 20, 2023

This open-source startup that specializes in neural networks has made a name for itself building a platform that allows organizations to train large language models. MosaicML is one of the pioneers of the private LLM market, making it possible for companies to harness the power of specialized AI to suit specific needs.

AWS Machine Learning Blog

AUGUST 8, 2024

Next, you need to index this data to make it available for a Retrieval Augmented Generation (RAG) approach where relevant passages are delivered with high accuracy to a large language model (LLM). Senthil Kamala Rathinam is a Solutions Architect at Amazon Web Services specializing in data and analytics.

Expert insights. Personalized for you.

We have resent the email to

Are you sure you want to cancel your subscriptions?

Let's personalize your content