The importance of data ingestion and integration for enterprise AI

IBM Journey to AI blog

JANUARY 9, 2024

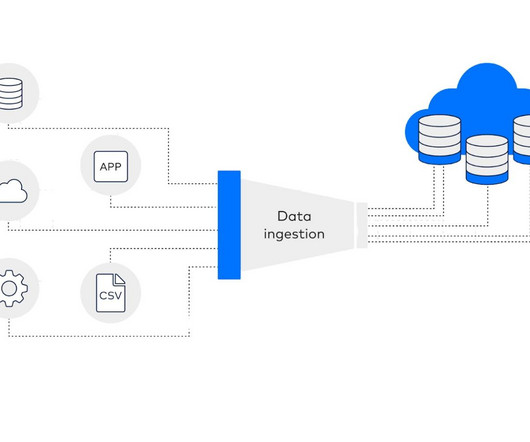

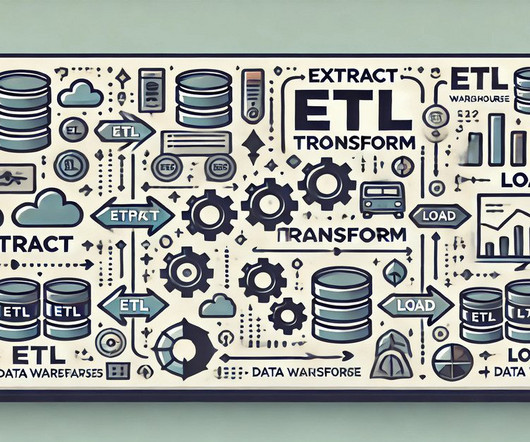

In both generative AI and traditional AI development cycles, data ingestion serves as the entry point. At this stage, raw data specific to a company’s requirements is gathered, preprocessed, masked and transformed into a format suitable for LLMs or other models. One popular pattern is extract, load, transform (ELT), in which raw data is loaded into the target store first and transformed there.
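To make the ingestion stages concrete, here is a minimal Python sketch of the preprocess, mask and transform steps applied to already-gathered text. Everything in it is an illustrative assumption rather than a prescribed pipeline: the function names (`preprocess`, `mask`, `to_model_record`, `ingest`), the email-only masking rule, the `[EMAIL]` placeholder and the JSON Lines output format are all hypothetical choices.

```python
import json
import re

# Assumed masking rule: only email addresses are treated as sensitive here.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def preprocess(text: str) -> str:
    """Collapse runs of whitespace (including newlines) and trim the ends."""
    return " ".join(text.split())


def mask(text: str) -> str:
    """Replace anything that looks like an email address with a placeholder."""
    return EMAIL_RE.sub("[EMAIL]", text)


def to_model_record(doc_id: str, text: str) -> str:
    """Serialize one document as a single JSON line."""
    return json.dumps({"id": doc_id, "text": text})


def ingest(raw_docs: dict[str, str]) -> list[str]:
    """Run each gathered document through preprocess -> mask -> transform."""
    return [
        to_model_record(doc_id, mask(preprocess(text)))
        for doc_id, text in raw_docs.items()
    ]


# Hypothetical raw input standing in for data gathered from a source system.
raw = {"ticket-1": "  Contact  jane.doe@example.com\nabout the   invoice. "}
lines = ingest(raw)
print(lines[0])
```

In a real deployment the masking step would typically cover more categories of sensitive data than a single regular expression can catch, and the output format would depend on the model or store that consumes it.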
