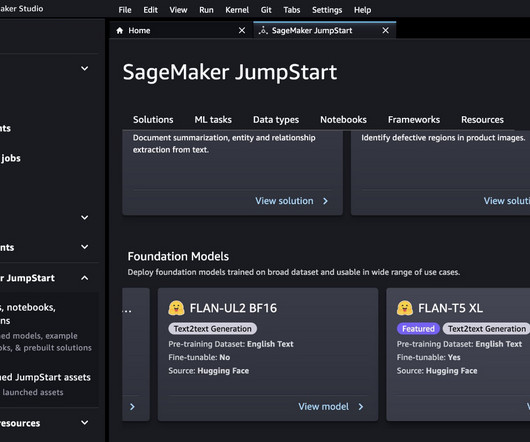

Seeking Faster, More Efficient AI? Meet FP6-LLM: the Breakthrough in GPU-Based Quantization for Large Language Models

Marktechpost

FEBRUARY 2, 2024

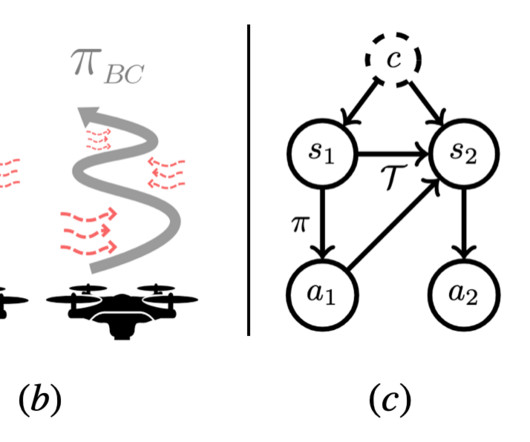

In computational linguistics and artificial intelligence, researchers continually strive to optimize the performance of large language models (LLMs). These models, renowned for their capacity to process a vast array of language-related tasks, face significant challenges due to their expansive size.

Let's personalize your content