Computational Linguistic Analysis of Engineered Chatbot Prompts

John Snow Labs

OCTOBER 10, 2023

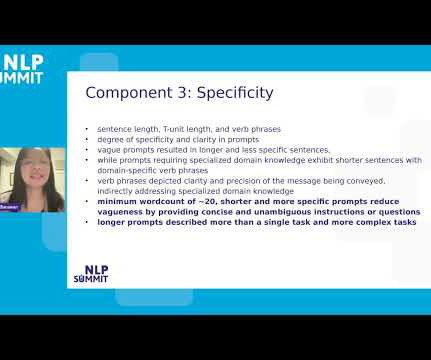

It is therefore important to analyze and understand the linguistic features of effective chatbot prompts for education. This paper presents a computational linguistic analysis of chatbot prompts used in education.
