This AI Paper from King’s College London Introduces a Theoretical Analysis of Neural Network Architectures Through Topos Theory

Marktechpost

APRIL 5, 2024

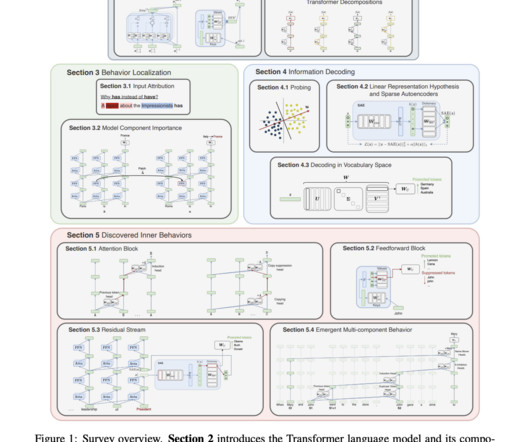

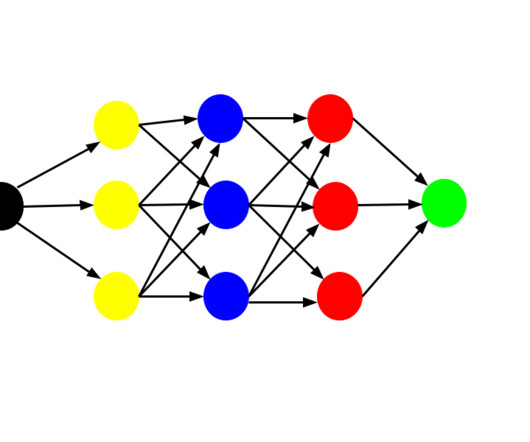

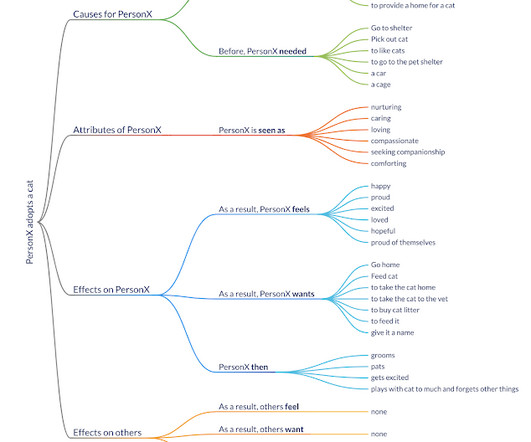

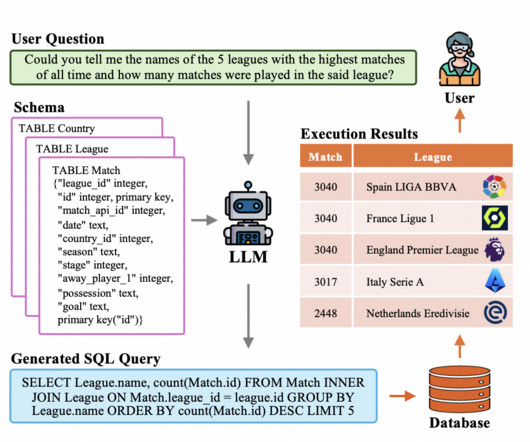

King’s College London researchers have highlighted the importance of developing a theoretical understanding of why transformer architectures, such as those used in models like ChatGPT, have succeeded in natural language processing tasks.

Let's personalize your content