This AI Paper from King’s College London Introduces a Theoretical Analysis of Neural Network Architectures Through Topos Theory

Marktechpost

APRIL 5, 2024

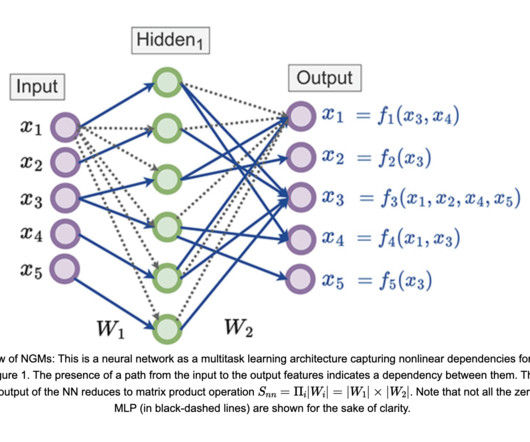

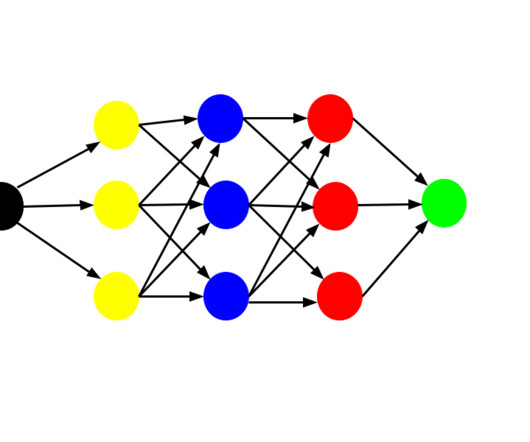

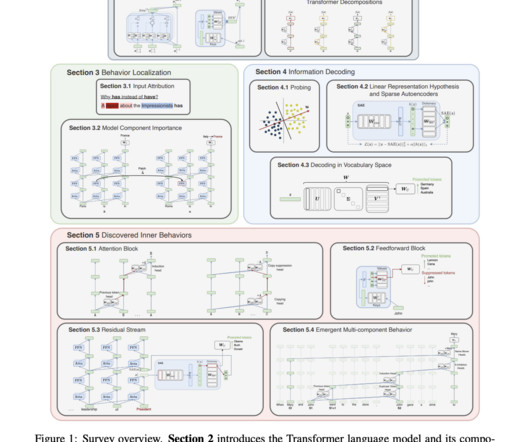

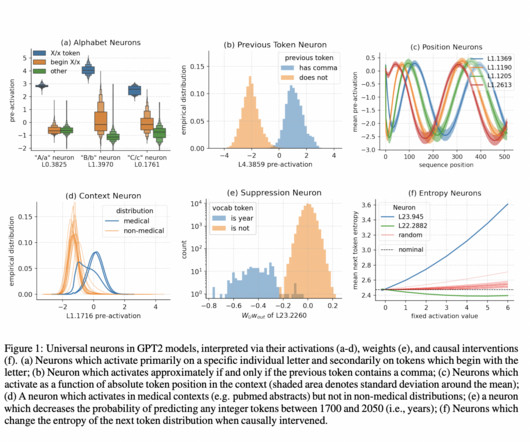

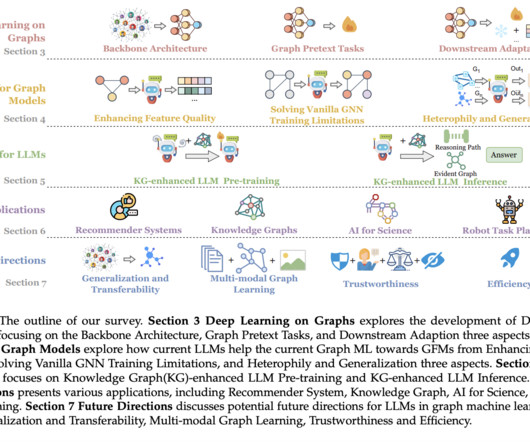

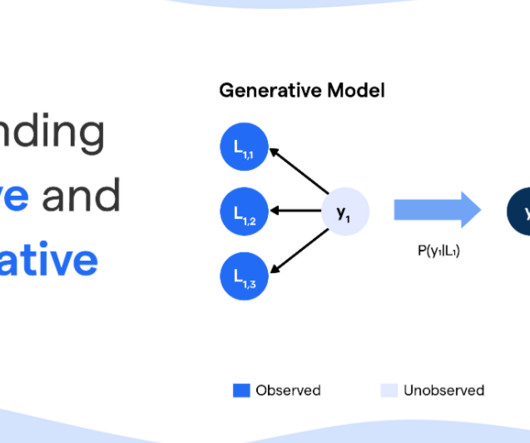

In their paper, the researchers propose a theory that explains how transformers work and gives a clear account of how they differ from traditional feedforward neural networks. Transformer architectures, which underlie models like ChatGPT, have revolutionized natural language processing.
