Learn Attention Models From Scratch

Analytics Vidhya

JUNE 26, 2023

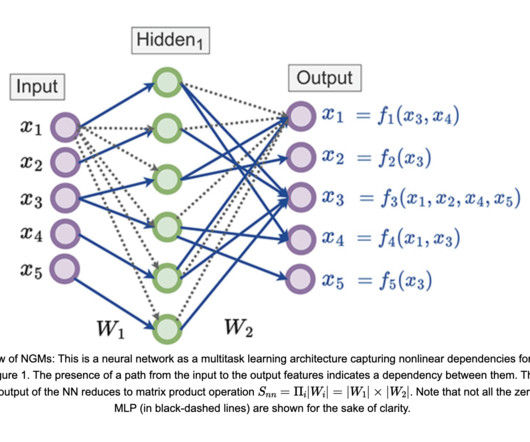

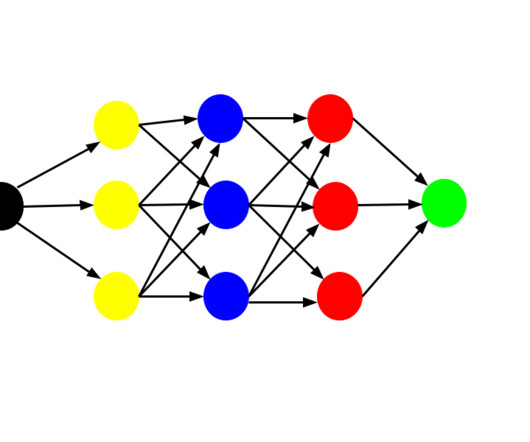

Introduction

Attention models, also known as attention mechanisms, are input processing techniques used in neural networks. They let the network focus on different parts of a complex input one at a time, giving more weight to the parts most relevant at each step, until the entire input has been processed.
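To make the idea of "focusing" concrete, here is a minimal sketch of one common form of attention, scaled dot-product attention, in plain Python. The article excerpt does not say which variant it has in mind, so this choice is an assumption, and the names `softmax`, `attend`, `query`, `keys`, and `values` are illustrative rather than taken from the article.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Return (weights, context) for one query over a sequence of inputs.

    Assumption: scaled dot-product scoring; other scoring functions exist.
    """
    scale = math.sqrt(len(query))
    # Score each input position by how well its key matches the query.
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # The context vector is the weighted average of the value vectors.
    dim = len(values[0])
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, context


# Toy input: three positions, each with a 2-dimensional key and value.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [1.0, 0.0]

weights, context = attend(query, keys, values)
print("weights:", weights)
print("context:", context)
```

In this toy example the query matches the first and third keys better than the second, so those positions receive larger weights and contribute more to the resulting context vector. That weighting is what "focus" means in practice.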
