Comparing Tools For Data Processing Pipelines

The MLOps Blog

MARCH 15, 2023

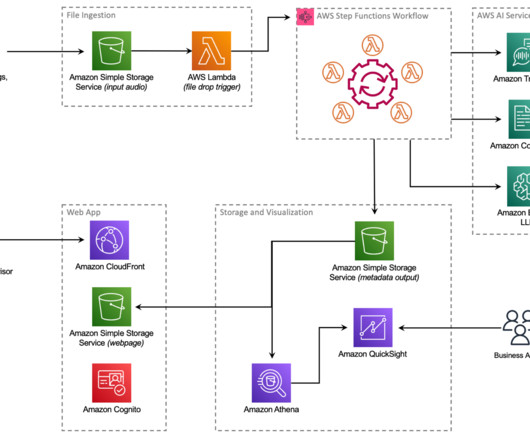

A typical data pipeline consists of the following steps, through which data passes before it is consumed by a downstream process such as ML model training.

Data Ingestion: Collects raw data from its origin and stores it, using an architecture such as batch, streaming, or event-driven.
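To make the three ingestion architectures more concrete, here is a minimal sketch in plain Python. The sample data and the function names (`ingest_batch`, `ingest_stream`, `ingest_on_event`) are hypothetical and chosen only for illustration. A real pipeline would read from files, message queues, or APIs rather than an in-memory string.

```python
import csv
import io
from typing import Callable, Dict, Iterable, Iterator, List

# Hypothetical raw data standing in for a file or upstream system.
RAW_CSV = "id,value\n1,10\n2,20\n3,30\n"

Record = Dict[str, str]


def ingest_batch(source: str) -> List[Record]:
    """Batch: read the entire source in one pass and return all records."""
    return list(csv.DictReader(io.StringIO(source)))


def ingest_stream(source: str) -> Iterator[Record]:
    """Streaming: yield records one at a time so consumers can start early."""
    for row in csv.DictReader(io.StringIO(source)):
        yield row


def ingest_on_event(events: Iterable[Record],
                    handler: Callable[[Record], None]) -> None:
    """Event-driven: invoke a handler each time a new event arrives."""
    for event in events:
        handler(event)


if __name__ == "__main__":
    print(ingest_batch(RAW_CSV))          # all records at once
    print(next(ingest_stream(RAW_CSV)))   # first record only

    stored: List[Record] = []             # stand-in for a storage layer
    ingest_on_event(ingest_batch(RAW_CSV), stored.append)
    print(len(stored))
```

The batch function materializes everything before returning, while the streaming version hands over each record as soon as it is parsed. The event-driven version inverts control: the caller registers a handler and the ingestion step decides when to call it.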
