Good ETL Practices with Apache Airflow

Analytics Vidhya

NOVEMBER 30, 2021

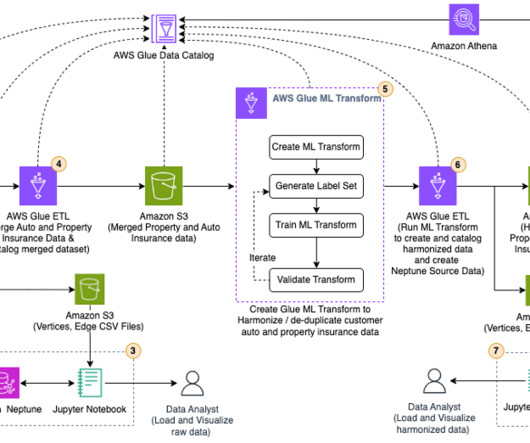

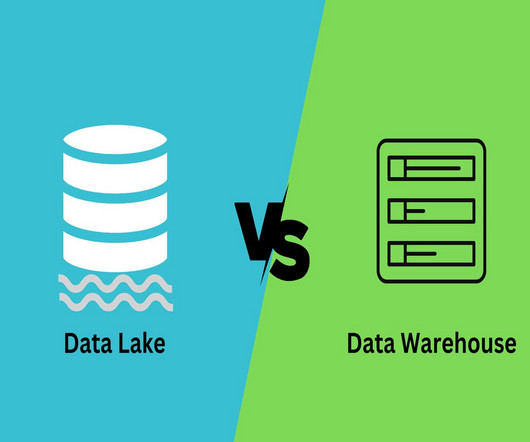

This article was published as a part of the Data Science Blogathon.

Introduction to ETL

ETL is a three-step data integration process: Extraction, Transformation, and Load. It is used to combine data from multiple sources and is commonly used in Big Data work.
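The three ETL steps can be sketched in plain Python. This is a minimal illustration, not the article's own code: the sample data, the `customer_totals` table, and the function names `extract`, `transform`, `load`, and `run_etl` are all assumptions made for the example. It uses only the standard library, with an in-memory CSV string and a Python list standing in for two separate sources and SQLite standing in for the target store.

```python
import csv
import io
import sqlite3

# Hypothetical sample inputs standing in for two separate sources.
ORDERS_CSV = "order_id,customer_id,amount\n1,10,19.99\n2,11,5.00\n3,10,7.51\n"
CUSTOMERS = [
    {"customer_id": 10, "name": "Ada"},
    {"customer_id": 11, "name": "Grace"},
]


def extract():
    """Extraction: read raw records from each source."""
    orders = list(csv.DictReader(io.StringIO(ORDERS_CSV)))
    return orders, CUSTOMERS


def transform(orders, customers):
    """Transformation: cast types, join the sources, total amounts per customer."""
    names = {c["customer_id"]: c["name"] for c in customers}
    totals = {}
    for row in orders:
        cid = int(row["customer_id"])  # CSV values arrive as strings
        totals[cid] = totals.get(cid, 0.0) + float(row["amount"])
    return [(cid, names[cid], round(total, 2)) for cid, total in sorted(totals.items())]


def load(rows, conn):
    """Load: write the combined rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customer_totals "
        "(customer_id INTEGER PRIMARY KEY, name TEXT, total REAL)"
    )
    # INSERT OR REPLACE keeps the load idempotent if the job is re-run.
    conn.executemany("INSERT OR REPLACE INTO customer_totals VALUES (?, ?, ?)", rows)
    conn.commit()


def run_etl(conn):
    """Run the three steps in order against the given SQLite connection."""
    orders, customers = extract()
    load(transform(orders, customers), conn)
```

Calling `run_etl(sqlite3.connect(":memory:"))` runs the whole pipeline against a throwaway database. In an Airflow setting, each of the three functions would typically become its own task so that steps can be retried and monitored separately, though how the article structures its DAG is not shown in this excerpt.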
