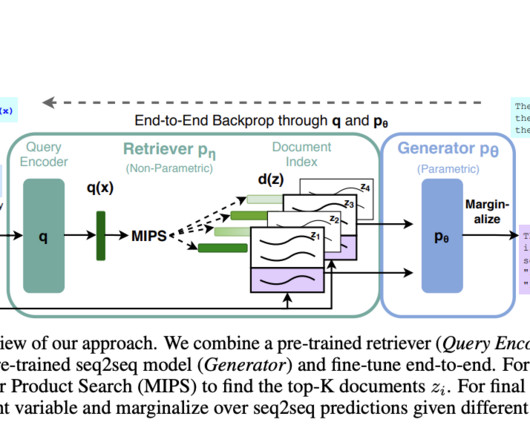

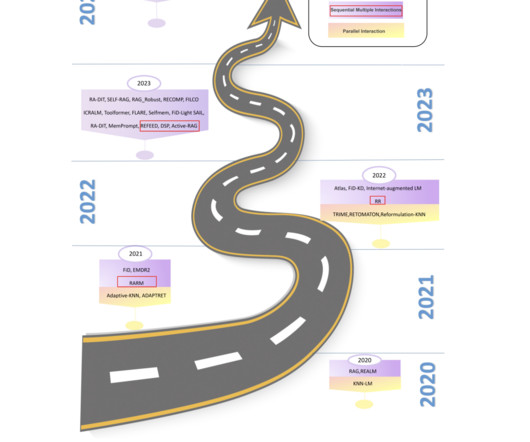

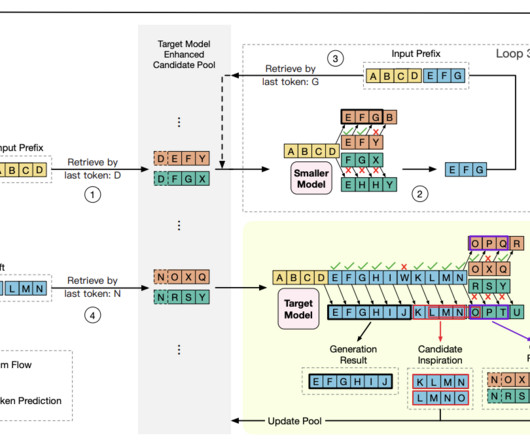

Combining the Best of Both Worlds: Retrieval-Augmented Generation for Knowledge-Intensive Natural Language Processing

Marktechpost

MAY 27, 2024

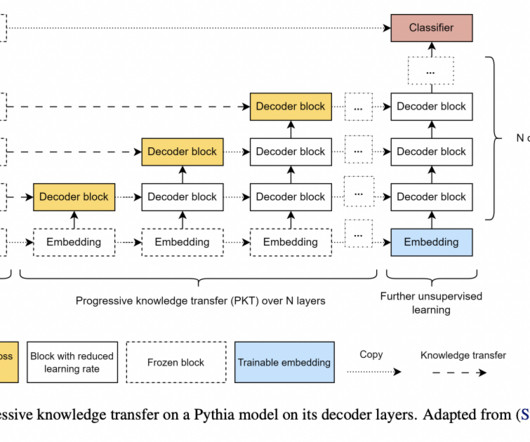

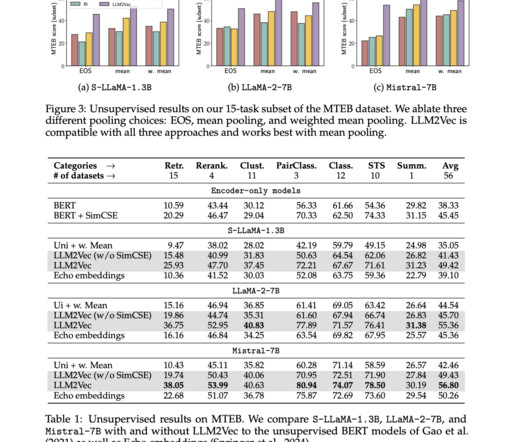

Knowledge-intensive Natural Language Processing (NLP) covers tasks that require deep understanding and manipulation of extensive factual information. The primary challenge in these tasks is that large pre-trained language models struggle to access and manipulate knowledge precisely.
