Build Your Own RLHF LLM — Forget Human Labelers!

Towards AI

FEBRUARY 19, 2024

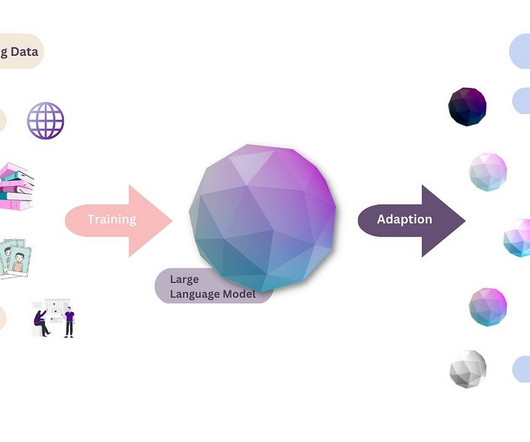

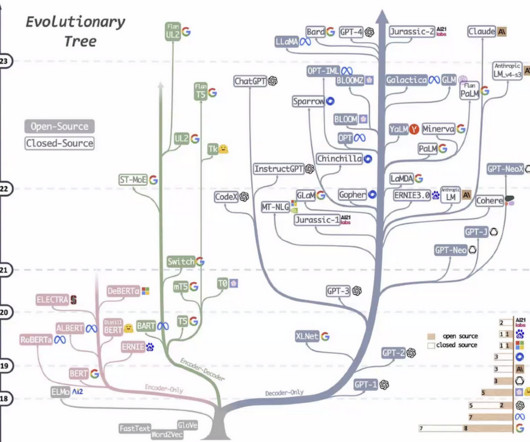

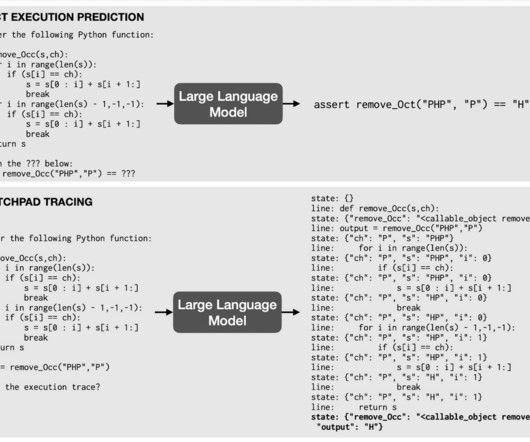

Author(s): Tim Cvetko

Originally published on Towards AI.

You know, that thing OpenAI used to make GPT-3.5 into ChatGPT? Forget Human Labelers!

As an early adopter of the BERT models in 2017, I hadn’t exactly been convinced that computers could interpret human language with the same granularity and contextuality as people do.
