How to Use Gemma LLM?

Analytics Vidhya

FEBRUARY 27, 2024

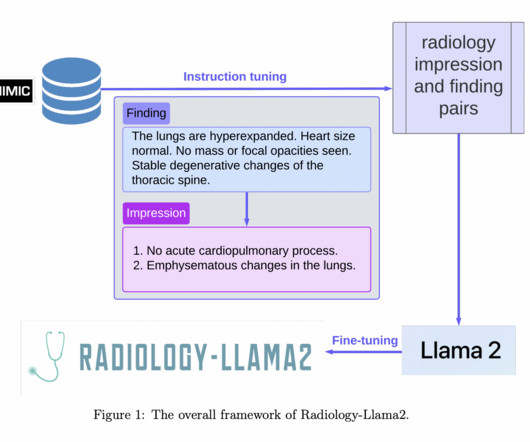

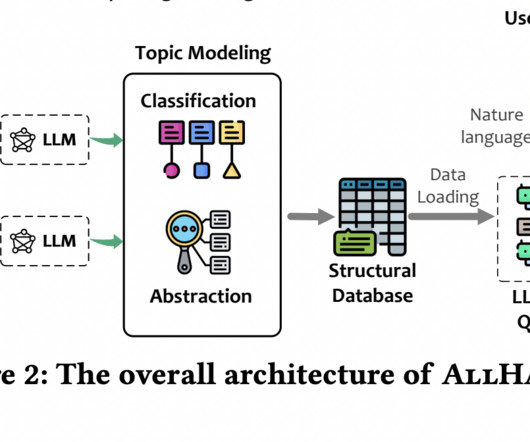

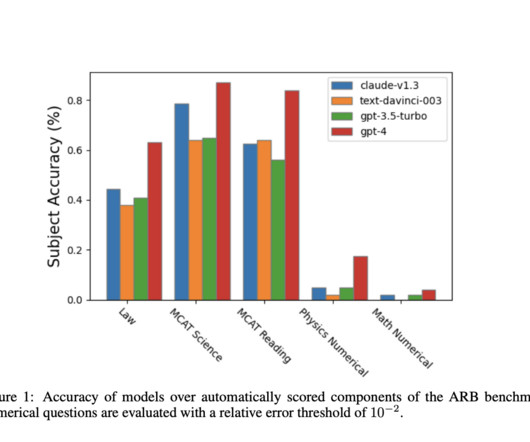

Large language models (LLMs) have achieved state-of-the-art results across a range of natural language processing tasks, including text summarization, machine translation, question answering, and dialogue generation. They have also shown promise in more specialized domains such as healthcare, finance, and law.
