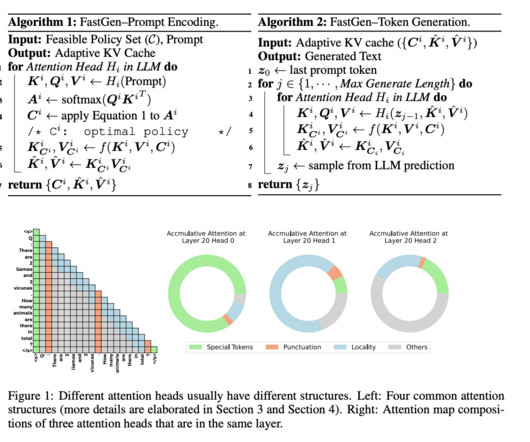

FastGen: Cutting GPU Memory Costs Without Compromising on LLM Quality

Marktechpost

MAY 12, 2024

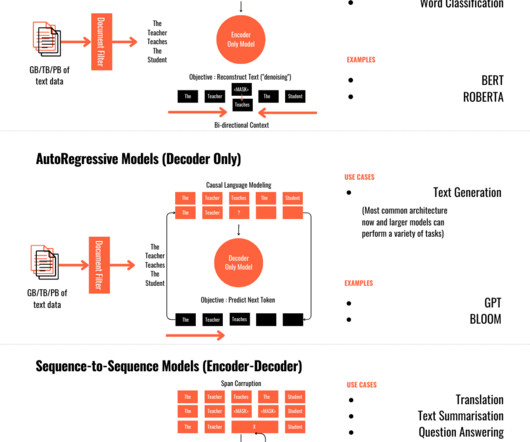

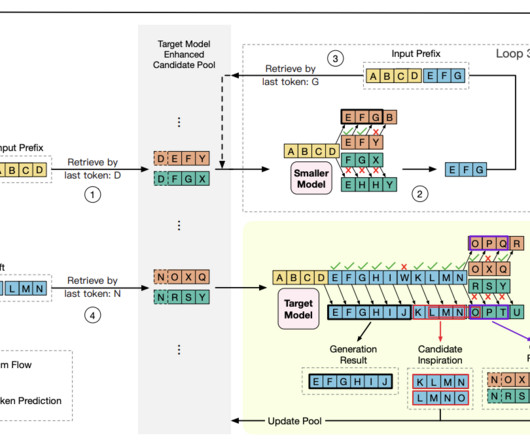

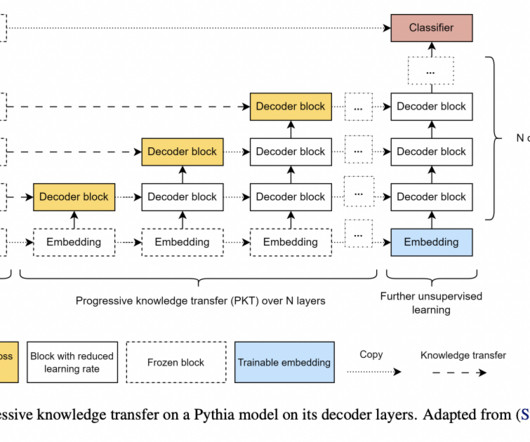

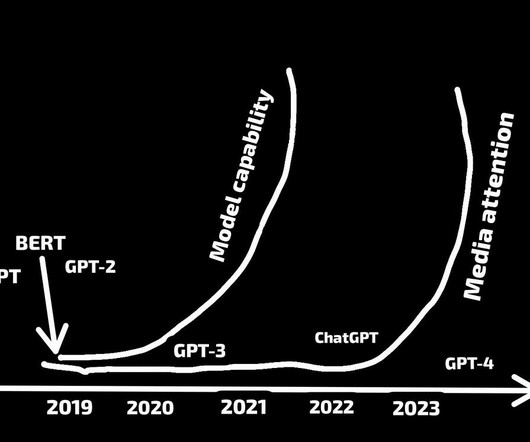

Much work has gone into improving the efficiency of LLMs; one such approach, for example, skips multiple tokens at a given time step. However, these methods have been applied only to non-autoregressive models and require an extra re-training phase, making them less suitable for autoregressive LLMs like ChatGPT and Llama.
