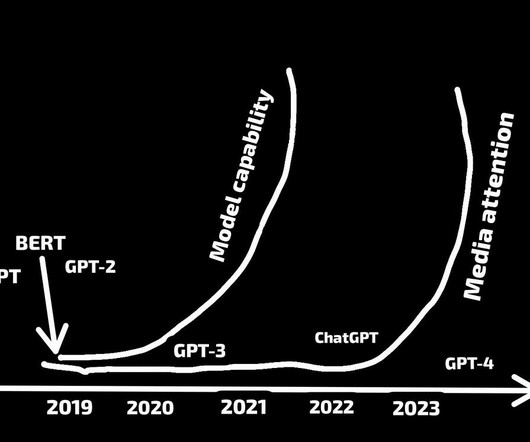

BERT Language Model and Transformers

Heartbeat

SEPTEMBER 11, 2023

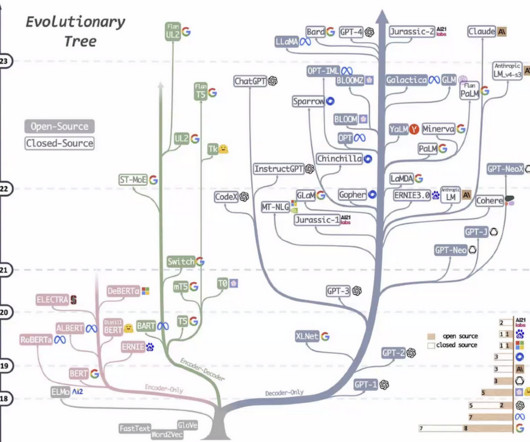

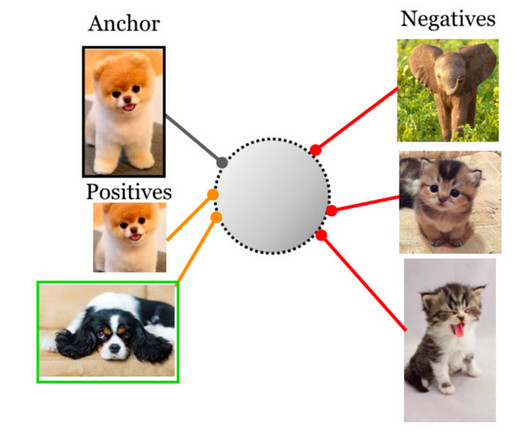

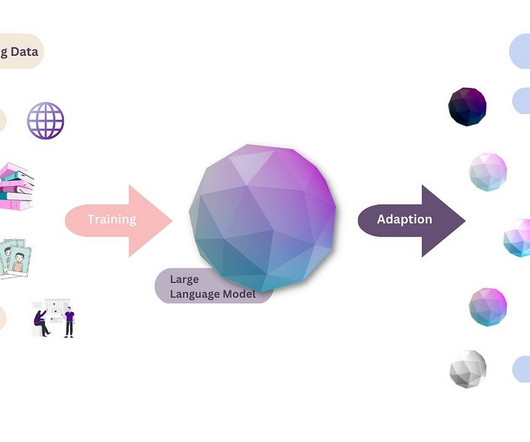

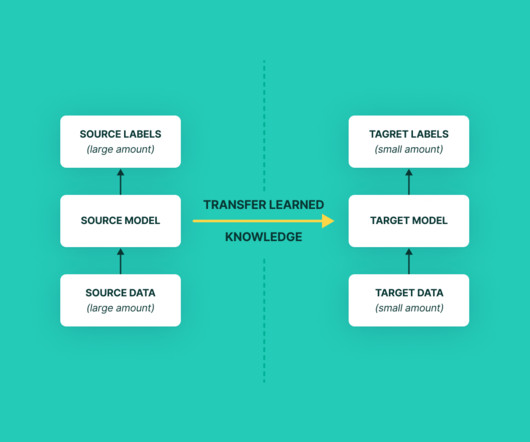

The following is a brief tutorial on how BERT and Transformers work in NLP-based analysis using the Masked Language Model (MLM).

Introduction

In this tutorial, we will provide a little background on the BERT model and how it works. The BERT model was pre-trained using text from Wikipedia.

What is BERT?

How Does BERT Work?
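To make the Masked Language Model idea concrete, here is a minimal sketch of the masking step in plain Python. It follows the commonly described BERT recipe: roughly 15% of tokens are selected as prediction targets, and of those about 80% are replaced by `[MASK]`, 10% by a random token, and 10% are left unchanged. The function name `mask_tokens`, its parameters, and the toy whitespace tokenizer are assumptions for illustration only. The sketch also selects each token independently with probability `mask_prob`, which only approximates choosing a fixed 15% of positions. It is not the tutorial's or any library's actual implementation.

```python
import random

MASK = "[MASK]"  # placeholder token the model learns to fill in


def mask_tokens(tokens, vocab, mask_prob=0.15, seed=None):
    """Return (masked_tokens, labels) for a toy MLM example.

    labels[i] holds the original token where a prediction is required,
    and None everywhere else. Hypothetical helper, for illustration only.
    """
    rng = random.Random(seed)
    masked = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        # Select each position independently with probability mask_prob.
        if rng.random() >= mask_prob:
            continue
        labels[i] = tok  # the model must recover this token
        roll = rng.random()
        if roll < 0.8:
            masked[i] = MASK               # ~80%: replace with [MASK]
        elif roll < 0.9:
            masked[i] = rng.choice(vocab)  # ~10%: replace with a random token
        # remaining ~10%: keep the original token unchanged
    return masked, labels


if __name__ == "__main__":
    sentence = "the bert model was pre-trained using text from wikipedia"
    tokens = sentence.split()  # toy tokenizer; BERT itself uses WordPiece
    vocab = sorted(set(tokens))
    masked, labels = mask_tokens(tokens, vocab, seed=0)
    print(masked)
    print(labels)
```

During pre-training, the model sees the masked sequence and is trained to predict the original token at every position where `labels` is not `None`. Leaving some selected tokens unchanged or swapping in random ones keeps the model from relying on the `[MASK]` symbol, which never appears at fine-tuning time.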
