Building Scalable AI Pipelines with MLOps: A Guide for Software Engineers

ODSC - Open Data Science

OCTOBER 7, 2024

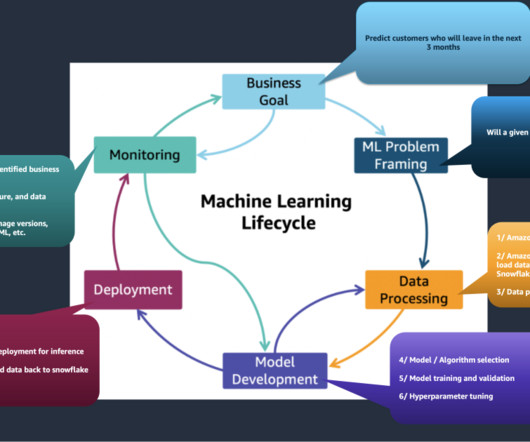

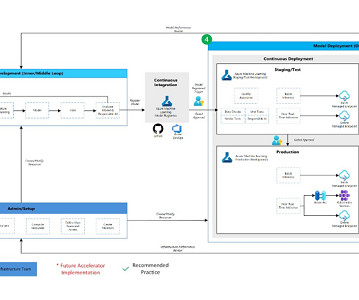

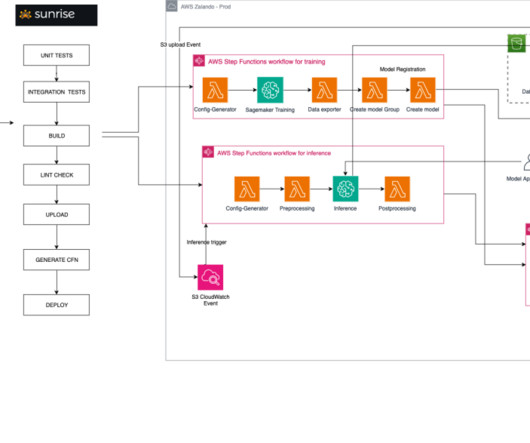

A key challenge in AI development is building scalable pipelines that can handle the complexity of modern data systems and models. The difficulties range from managing large datasets to automating model deployment and monitoring deployed models for performance drift. As datasets grow, scalable data ingestion and storage become critical.
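To make the ingestion point concrete, here is a minimal sketch of batch-wise processing, where only one batch of rows sits in memory at a time. It is an illustration under stated assumptions, not a method from the article: the helper names `iter_batches` and `running_mean`, the `latency_ms` column, and the in-memory sample data are all hypothetical.

```python
import csv
import io
from itertools import islice
from typing import Iterable, Iterator


def iter_batches(rows: Iterable, batch_size: int) -> Iterator[list]:
    """Yield lists of at most batch_size rows, holding one batch in memory."""
    iterator = iter(rows)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def running_mean(source, column: str, batch_size: int = 1000) -> float:
    """Compute the mean of a numeric CSV column one batch at a time."""
    total = 0.0
    count = 0
    for batch in iter_batches(csv.DictReader(source), batch_size):
        total += sum(float(row[column]) for row in batch)
        count += len(batch)
    if count == 0:
        raise ValueError("no rows to aggregate")
    return total / count


# Hypothetical in-memory data standing in for a large file on disk.
sample = io.StringIO("latency_ms\n10\n20\n30\n40\n")
mean_latency = running_mean(sample, "latency_ms", batch_size=3)
print(mean_latency)
```

Because `running_mean` accepts any iterable of text lines, the same call should work with an open file handle in place of the `StringIO` sample. Memory use then depends on `batch_size` rather than on the size of the file.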
