Operationalizing Large Language Models: How LLMOps can help your LLM-based applications succeed

deepsense.ai

JULY 30, 2023

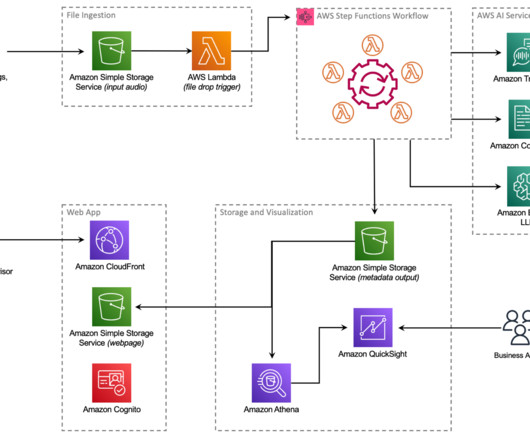

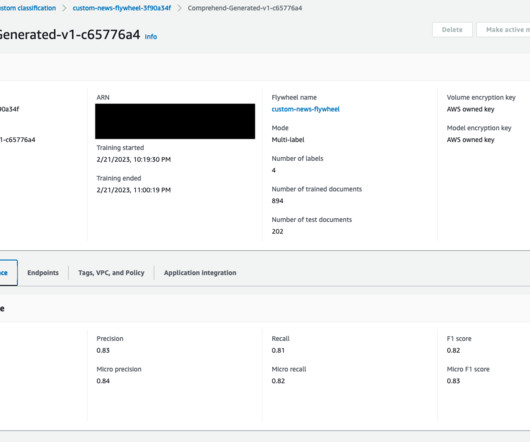

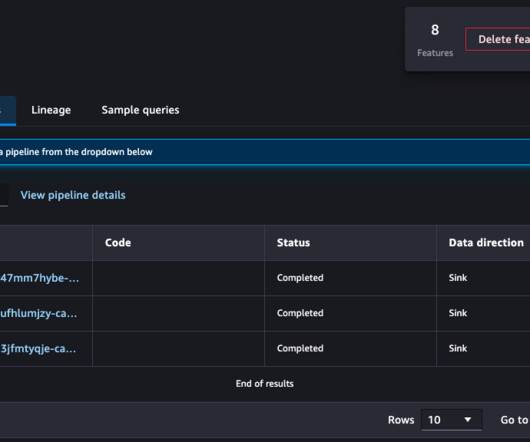

Other steps include data ingestion, validation, and preprocessing; model deployment and versioning of model artifacts; live monitoring of large language models in production; and tracking the quality of deployed models, retraining them when that quality degrades. Of course, the desired level of automation differs from project to project.
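To make the quality-monitoring and retraining steps more concrete, here is a minimal sketch in Python. It is only an illustration under stated assumptions, not a description of any particular LLMOps tool: the `QualityMonitor` class, its `threshold` and `window_size` parameters, and the idea of a single numeric quality score per response are all hypothetical. In practice the score might come from human feedback, an automated evaluation metric, or another model.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class QualityMonitor:
    """Tracks a rolling window of quality scores for a deployed model.

    All names and default values here are hypothetical, chosen for illustration.
    """
    threshold: float = 0.7   # assumed minimum acceptable mean score
    window_size: int = 5     # assumed number of recent scores to keep
    scores: deque = field(default_factory=deque)

    def record(self, score: float) -> None:
        """Store a new score and drop the oldest one once the window is full."""
        self.scores.append(score)
        if len(self.scores) > self.window_size:
            self.scores.popleft()

    def needs_retraining(self) -> bool:
        """Flag retraining only when a full window averages below the threshold."""
        if len(self.scores) < self.window_size:
            return False  # not enough evidence yet
        return sum(self.scores) / len(self.scores) < self.threshold


if __name__ == "__main__":
    monitor = QualityMonitor()
    for score in [0.9, 0.8, 0.6, 0.5, 0.4, 0.3]:
        monitor.record(score)
        print(f"score={score:.1f} retrain={monitor.needs_retraining()}")
```

How much of this loop to automate is a project-level decision: some teams would let a flag like this trigger a retraining pipeline directly, while others would only raise an alert for a human to review.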
