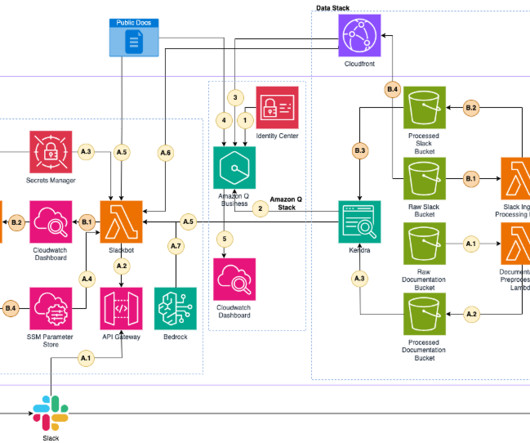

Amazon Q Business simplifies integration of enterprise knowledge bases at scale

FEBRUARY 11, 2025

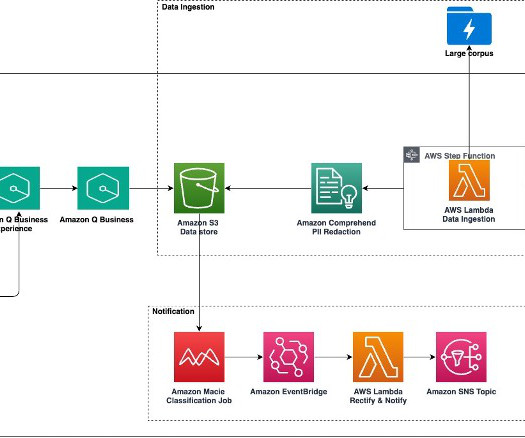

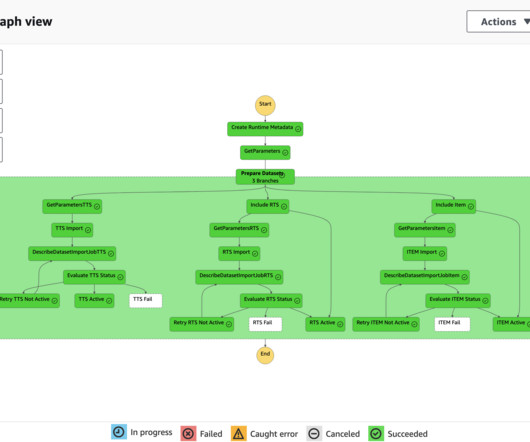

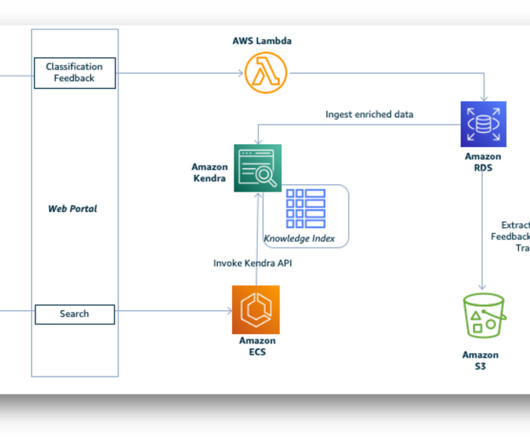

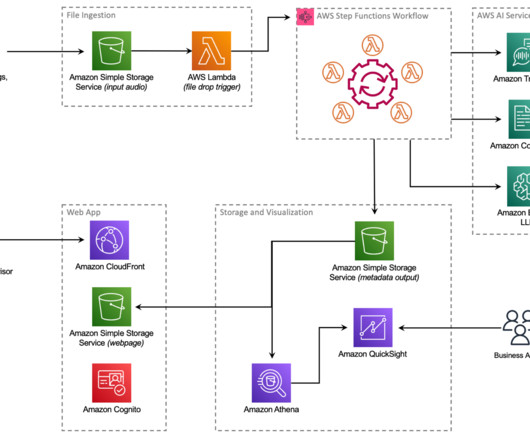

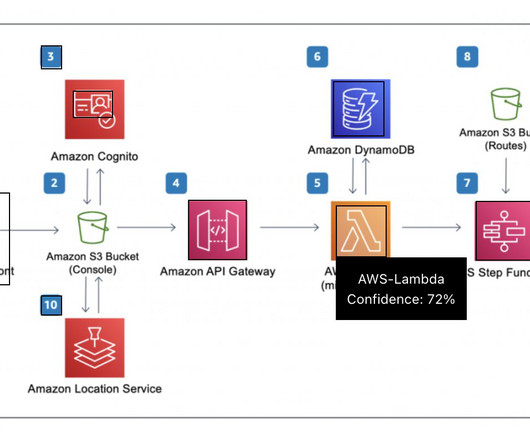

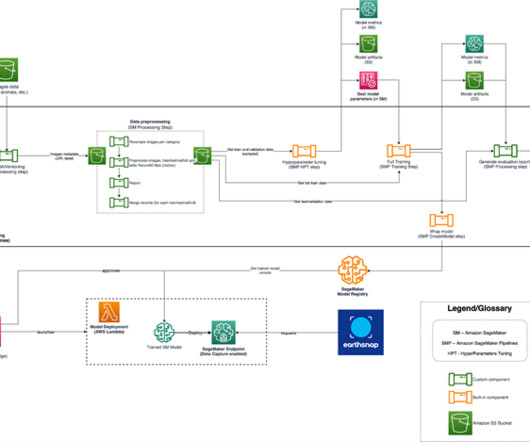

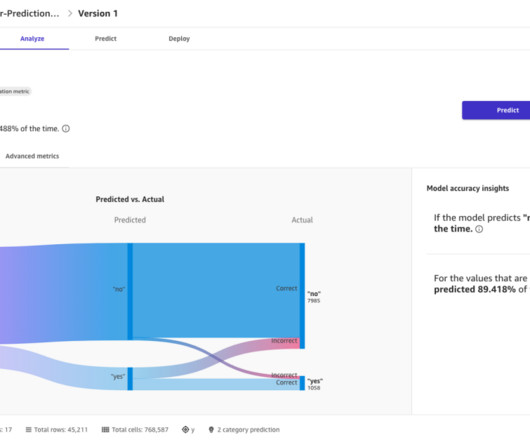

Amazon Q Business, a new generative AI-powered assistant, can answer questions, provide summaries, generate content, and securely complete tasks based on the data and information in an enterprise's systems. Large-scale data ingestion is crucial for applications such as document analysis, summarization, research, and knowledge management.
